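Installing Docker

¶Manual installation

VM tool: VirtualBox

VM provisioning tool: Vagrant

# Create a directory to serve as the VM's home. Each VM gets its own home directory, which holds one configuration file (the Vagrantfile).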

vagrant --help

vagrant version

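vagrant init centos/7 # generate a configuration file for CentOS 7

vagrant up # create and boot the VM from the configuration file in the current directory

vagrant ssh # log in to the VM that was just created

vagrant status # show the VM's running state

vagrant halt # stop the VM

vagrant destroy # delete the VM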

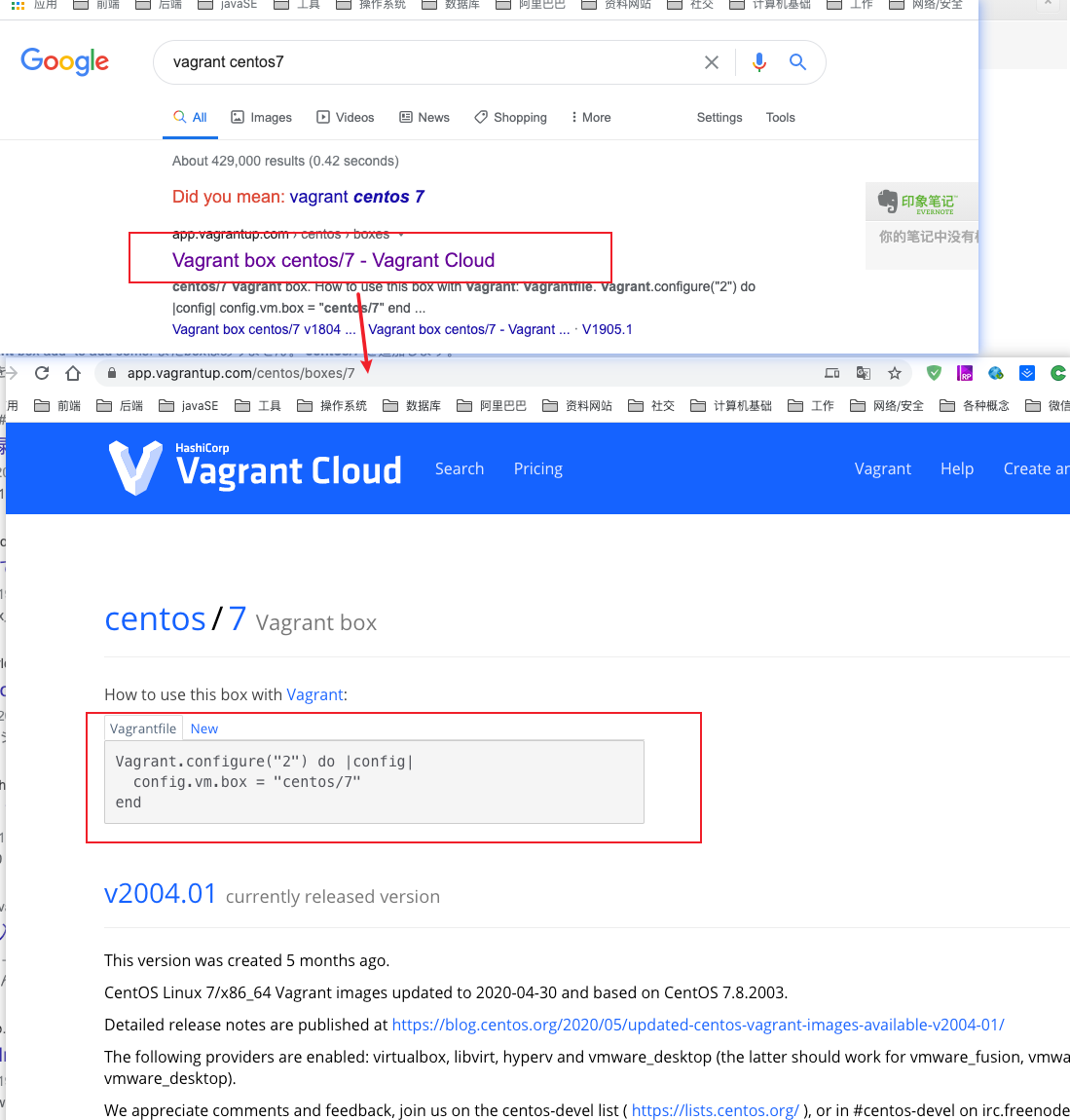

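To install a particular OS version, a ready-made configuration can usually be found with a web search. For CentOS 7, for example, search for “vagrant centos7”; the first result is usually a page under “https://app.vagrantup.com/”, and that page has the relevant details: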

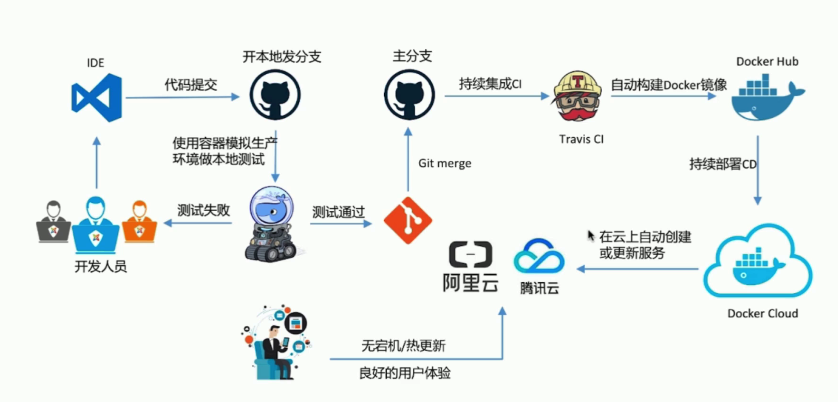
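As a rough illustration of the "one home directory, one configuration file" idea, the sketch below writes a minimal Vagrantfile by hand. The directory name demo-vm is hypothetical, and the file that `vagrant init centos/7` actually generates is longer because it includes many commented-out options.

```shell
# Create the VM's home directory (the name "demo-vm" is only an example).
mkdir -p demo-vm

# Write a minimal Vagrantfile. It names the box to boot and nothing else.
cat > demo-vm/Vagrantfile <<'EOF'
Vagrant.configure("2") do |config|
  config.vm.box = "centos/7"
end
EOF

# Show the result. Running `vagrant up` inside demo-vm would boot the VM.
cat demo-vm/Vagrantfile
```

Keeping each VM in its own directory means `vagrant up`, `vagrant ssh`, and `vagrant destroy` always act on the VM described by the Vagrantfile in the current directory.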

¶docker machine

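The Mac and Windows installations ship with Docker Machine, a tool that automates installing and managing Docker itself. Cloud vendors such as Alibaba Cloud and Microsoft's cloud also build on Docker Machine to create a cloud host with Docker preinstalled; search for “docker machine aliyun” for details.

¶docker playground

An organization maintains a pool of cloud hosts with Docker preinstalled. Users can request a temporary host on the web page and use it through a web terminal.

Vagrant issues

¶Cannot switch to the root user

sudo su # Vagrant does not set a root password by default; this command switches to root, after which the root password can be changed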

passwd root

¶Cannot SSH in remotely

The following error appears:

ssh failed Permission denied (publickey,gssapi-keyex,gssapi-with-mic).

Edit /etc/ssh/sshd_config and set:

PasswordAuthentication yes

Then run service sshd restart
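The edit above can also be scripted. The following is a minimal sketch, not a hardened tool: the function name enable_password_auth is made up for this example, and it is demonstrated on a scratch file rather than the live /etc/ssh/sshd_config.

```shell
# enable_password_auth FILE
# Rewrites any existing (possibly commented-out) PasswordAuthentication line
# to "PasswordAuthentication yes"; appends the directive if none exists.
enable_password_auth() {
  local file="$1"
  local tmp
  local pattern='^[[:space:]]*#?[[:space:]]*PasswordAuthentication[[:space:]].*'
  if grep -Eq "$pattern" "$file"; then
    tmp="$(mktemp)"
    sed -E "s/${pattern}/PasswordAuthentication yes/" "$file" > "$tmp"
    cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
    rm -f "$tmp"
  else
    printf 'PasswordAuthentication yes\n' >> "$file"
  fi
}

# Try it on a scratch copy rather than the live configuration.
scratch="$(mktemp)"
printf 'Port 22\nPasswordAuthentication no\n' > "$scratch"
enable_password_auth "$scratch"
cat "$scratch"
```

On the VM itself this would be run as root against /etc/ssh/sshd_config, followed by the sshd restart described above.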

¶Mounting a directory fails

Setting config.vm.synced_folder "labs", "/home/vagrant/labs" produces the following error:

mount: unknown filesystem type 'vboxsf'

Fix:

vagrant plugin install vagrant-vbguest

vagrant destroy && vagrant up

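¶Guest Additions issue

The downloaded CentOS box does not include the VirtualBox Guest Additions, so use a box that ships with them: https://app.vagrantup.com/geerlingguy/boxes/centos7/versions/1.2.24

Docker installation issues

¶Slow yum install of Docker from the official repository

Replace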

$ sudo yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

with

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

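¶Host and containers suddenly lose network access

The cause turned out to be DNS: domain names could not be resolved, although IP addresses could still be pinged. Edit /etc/resolv.conf and insert the following at the very top: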

nameserver 223.5.5.5

nameserver 223.6.6.6

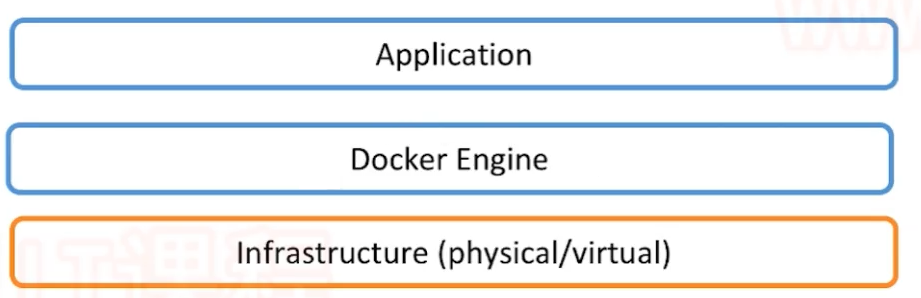

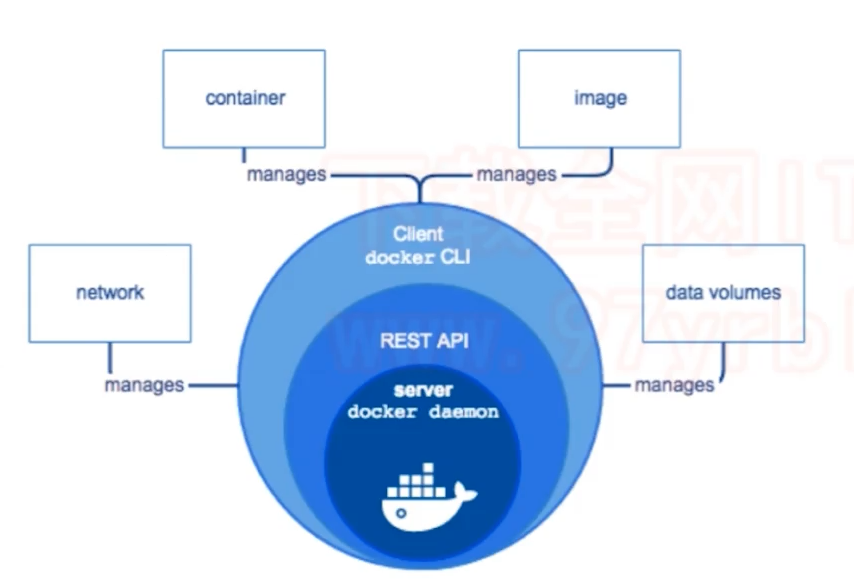
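A small sketch of the same change as a script. The function name prepend_nameservers is hypothetical, and the demonstration runs on a scratch file whose starting content (nameserver 10.0.2.3) is only an example, not the real /etc/resolv.conf.

```shell
# prepend_nameservers FILE
# Puts the two nameserver lines from the text at the top of FILE,
# keeping the existing content below them.
prepend_nameservers() {
  local file="$1"
  local tmp
  tmp="$(mktemp)"
  {
    printf 'nameserver 223.5.5.5\n'
    printf 'nameserver 223.6.6.6\n'
    cat "$file"
  } > "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demonstrate on a scratch file instead of the real /etc/resolv.conf.
resolv_copy="$(mktemp)"
printf 'nameserver 10.0.2.3\n' > "$resolv_copy"
prepend_nameservers "$resolv_copy"
cat "$resolv_copy"
```

Order matters here because the resolver tries nameservers in the order they are listed, which is why the new entries go first.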

Docker 架构

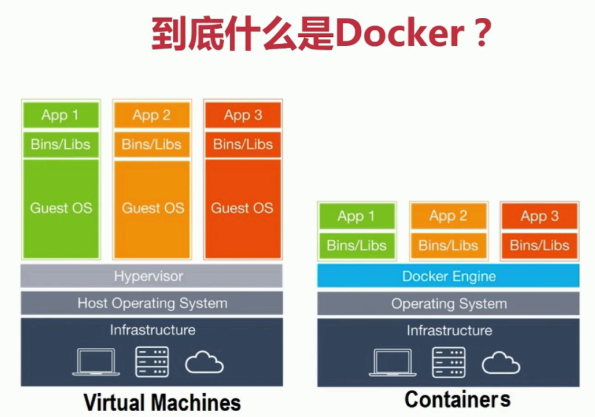

Docker 提供了一个开发、打包、运行 app 的平台,把 app 和底层 infrastructure 隔离开来。其中 Docker Engine 就是核心组件。

¶Docker Engine

Docker Engine 为 C/S 架构,分为三部分:

- 后台进程(dockerd)

- REST API Server

- CLI 接口(docker),通过 REST API Server 和 dockerd 通信

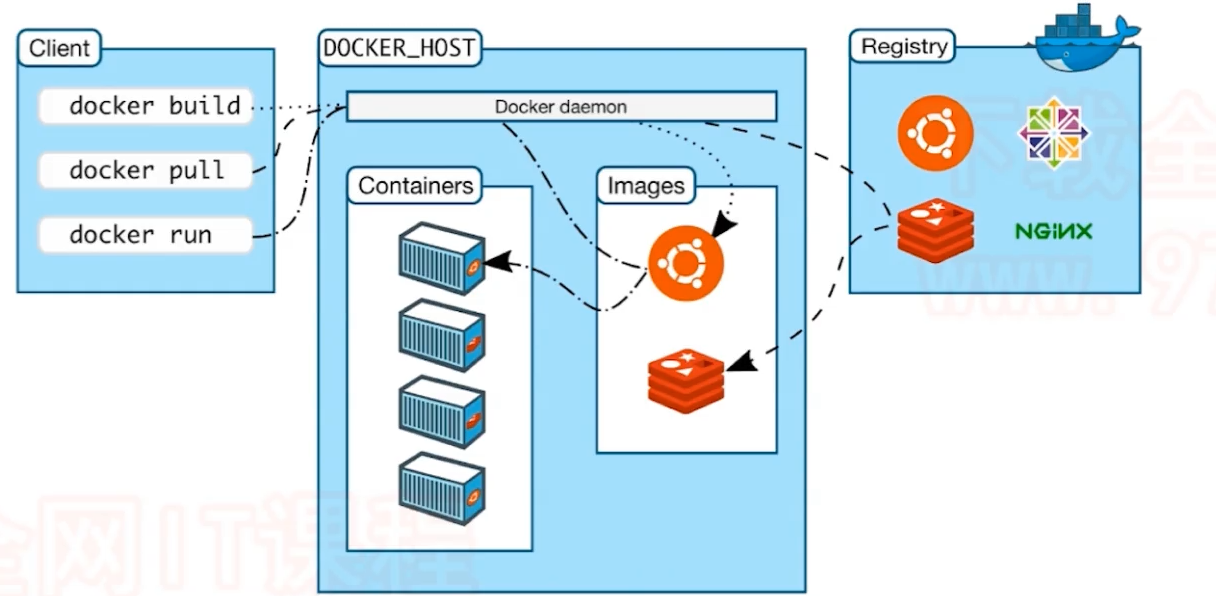
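Because the CLI is only a client of the REST API, the same API can be queried directly. The sketch below assumes dockerd listens on the default Unix socket /var/run/docker.sock and that curl is installed; the helper name docker_api_get is made up for this example.

```shell
# docker_api_get PATH
# Sends a GET request to the Docker Engine REST API over the local Unix socket.
docker_api_get() {
  local path="$1"
  curl --silent --unix-socket /var/run/docker.sock "http://localhost${path}"
}

# Only query the daemon when the socket actually exists on this machine.
if [ -S /var/run/docker.sock ]; then
  docker_api_get /version           # roughly the data behind `docker version`
  docker_api_get /containers/json   # roughly the data behind `docker ps`
else
  echo "Docker socket not found; skipping API calls"
fi
```

The responses are JSON documents; the docker CLI formats the same data into the tables it prints.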

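¶Docker overall architecture

A client connects to a DOCKER_HOST, a machine with dockerd installed, and performs operations such as pulling images and running containers.

¶Underlying technologies

- Namespaces: isolation (pid, net, ipc, mnt, uts)

- Control groups: resource limits

- Union file systems: layering of containers and images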

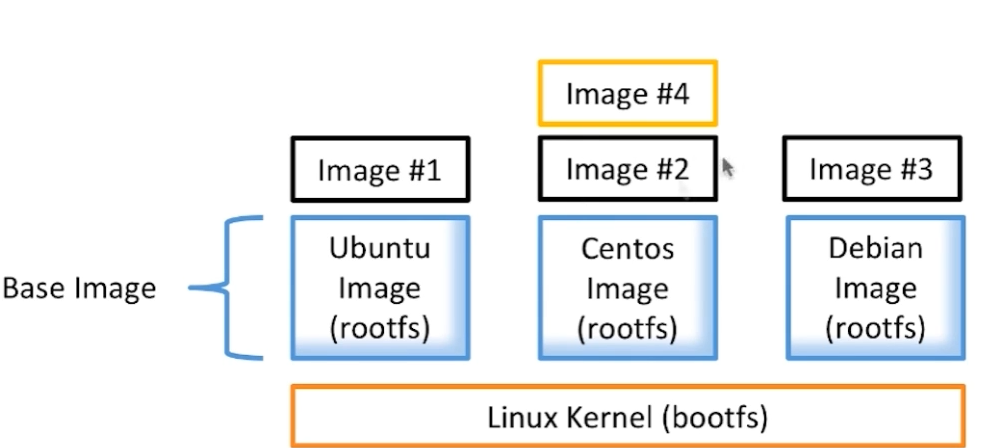

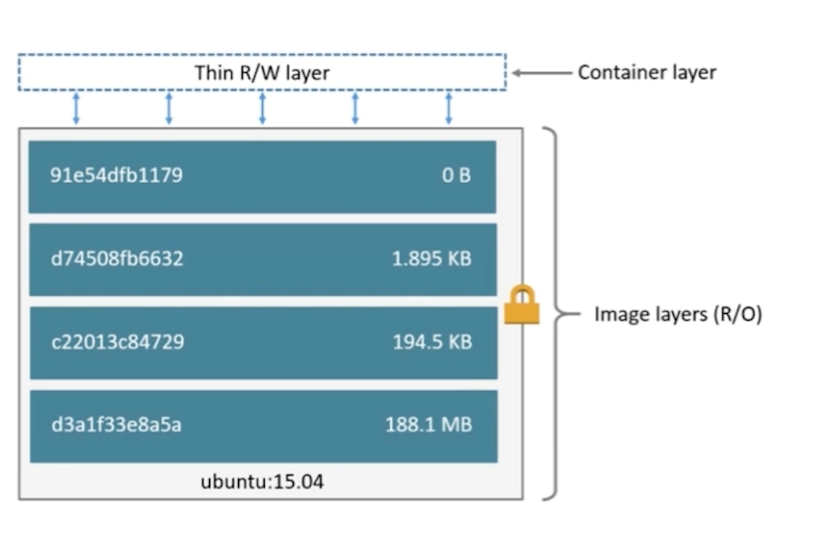

¶Docker Image

- A collection of files and metadata

- Layered; each layer can add, change, or delete files, producing a new image

- Different images can share the same base layers

- An image itself is read-only

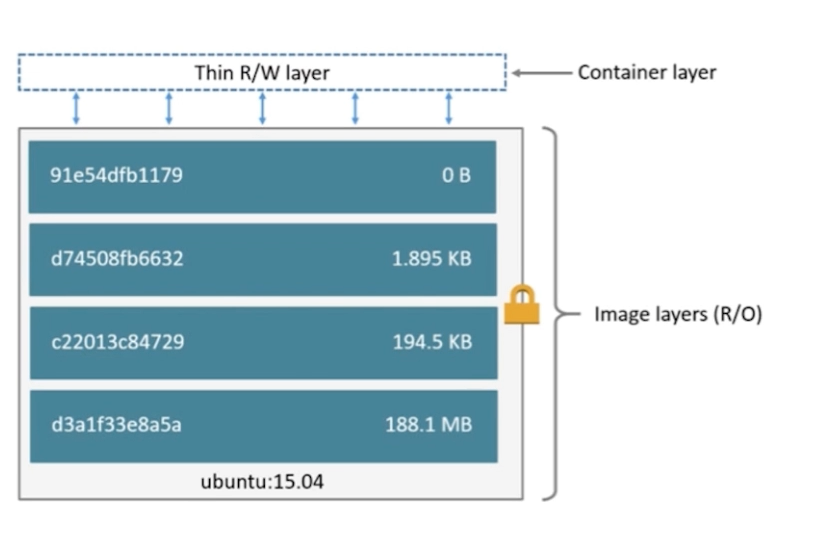

¶Docker Container

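- Created from an image (as a copy)

- Adds a container layer (read-write) on top of the image layers

- Object-oriented analogy: an image is the class, a container is the instance

- An image handles storing and distributing the app; a container handles running it

Docker networking

¶Linux network namespaces

¶Inspecting a container's network interfaces

First create two containers, test1 and test2: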

[root@docker-node1 ~]# docker run -d --name test1 busybox /bin/sh -c "while true;do sleep 3600; done"

Unable to find image 'busybox:latest' locally

latest: Pulling from library/busybox

9758c28807f2: Already exists

Digest: sha256:a9286defaba7b3a519d585ba0e37d0b2cbee74ebfe590960b0b1d6a5e97d1e1d

Status: Downloaded newer image for busybox:latest

040676f4f47f3fb22bc657099e017a884cc79b045cc67ba1732c3681042568b8

[root@docker-node1 ~]# docker run -d --name test2 busybox /bin/sh -c "while true;do sleep 3600; done"

2c38e69e55e481683c79eceda32f033e5c34e028581f203189671fdf32ace443

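Enter container test1 and run ip a to list its network interfaces. It shows the loopback interface and an interface named eth0@if6, with indexes 1 and 5 respectively: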

[root@docker-node1 ~]# docker exec -it test1 /bin/sh

/ # ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

5: eth0@if6: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

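Enter container test2 and run ip a to list its network interfaces. It shows the loopback interface and an interface named eth0@if8, with indexes 1 and 7 respectively: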

/ # exit

[root@docker-node1 ~]# docker exec -it test2 /bin/sh

/ # ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

7: eth0@if8: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

The eth0 interfaces of the two containers are on the same subnet, so the containers can ping each other:

/ # ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.141 ms

64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.685 ms

^C

--- 172.17.0.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.141/0.413/0.685 ms

/ # exit

[root@docker-node1 ~]#

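After exiting, run ip a on the host. The containers' eth0 interfaces (indexes 5 and 7) are not visible there. The docker0 interface, however, is on the same subnet as both containers: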

[root@docker-node1 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 81204sec preferred_lft 81204sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fee6:45bc/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:25ff:fedb:4963/64 scope link

valid_lft forever preferred_lft forever

6: veth2aaf7ce@if5: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ea:87:9c:ff:2b:ae brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::e887:9cff:feff:2bae/64 scope link

valid_lft forever preferred_lft forever

8: vethf1db1bd@if7: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 62:b7:11:da:66:56 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::60b7:11ff:feda:6656/64 scope link

valid_lft forever preferred_lft forever

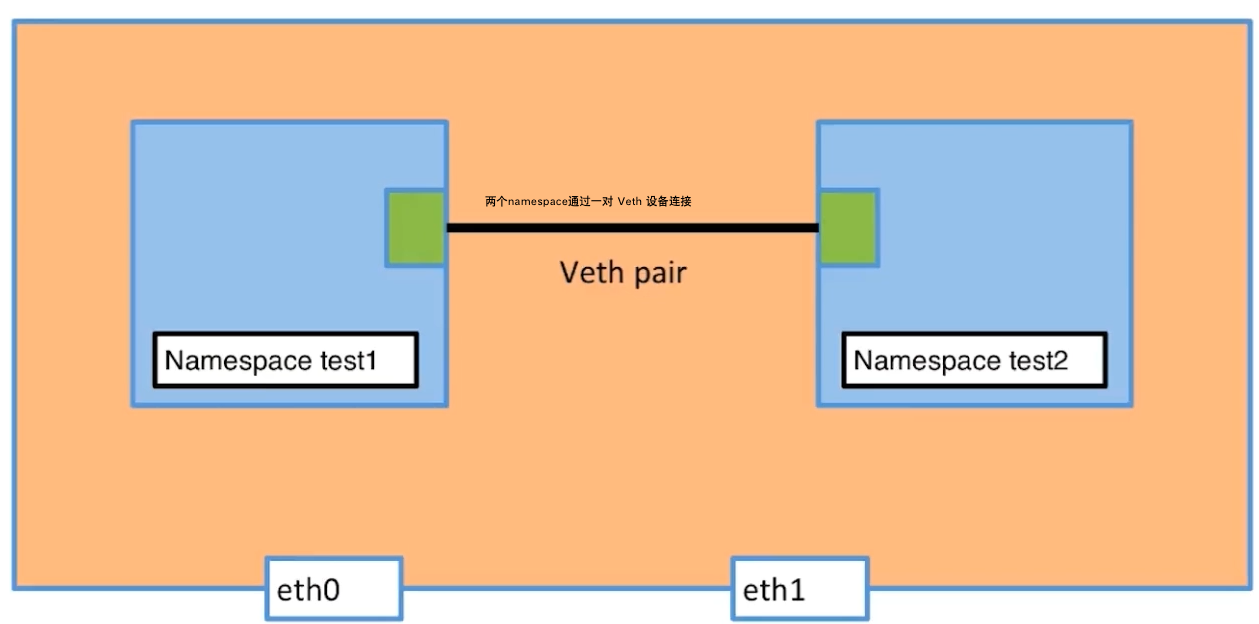
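The `@ifN` suffix in these listings gives the index of the interface at the other end of a veth pair, which is how container interface 5 (eth0@if6) can be matched to host interface 6 (veth2aaf7ce@if5). A small sketch of reading that suffix follows; the helper name peer_ifindex is hypothetical.

```shell
# peer_ifindex NAME
# Given an interface name as printed by `ip a` (for example "eth0@if6"),
# prints the index of the peer interface (here "6").
peer_ifindex() {
  local name="$1"
  case "$name" in
    *@if*) printf '%s\n' "${name##*@if}" ;;
    *)     return 1 ;;   # no peer suffix, e.g. "lo" or "docker0"
  esac
}

# Names copied from the listings above.
peer_ifindex "eth0@if6"          # container test1, index 5: peer is host index 6
peer_ifindex "veth2aaf7ce@if5"   # host index 6: peer is container index 5
peer_ifindex "eth0@if8"          # container test2, index 7: peer is host index 8
```

Matching the two directions (5 points to 6, and 6 points back to 5) is a quick way to confirm which host-side veth belongs to which container.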

¶Working with network namespaces using Linux commands

Add, list, and delete network namespaces:

[root@docker-node1 ~]# ip netns add test1

[root@docker-node1 ~]# ip netns add test2

[root@docker-node1 ~]# ip netns add test3

[root@docker-node1 ~]# ip netns list

test3

test2

test1

[root@docker-node1 ~]# ip netns delete test3

[root@docker-node1 ~]# ip netns list

test2

test1

List the network interfaces in the test1 namespace. There is only a loopback interface; its state is DOWN and it has no IP address:

[root@docker-node1 ~]# ip netns exec test1 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

List the links in the test1 namespace:

[root@docker-node1 ~]# ip netns exec test1 ip link

1: lo: mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

List the links on the host:

[root@docker-node1 ~]# ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

3: eth1: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

4: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

6: veth2aaf7ce@if5: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether ea:87:9c:ff:2b:ae brd ff:ff:ff:ff:ff:ff link-netnsid 0

8: vethf1db1bd@if7: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 62:b7:11:da:66:56 brd ff:ff:ff:ff:ff:ff link-netnsid 1

Add a veth pair, veth-test1 and veth-test2. In the listing below, veth-test2 has index 12 and MAC address 3e:c1:1a:1d:37:3c, veth-test1 has index 13 and MAC address 2a:d1:1d:4b:9f:6c, and both are in state DOWN.

[root@docker-node1 ~]# ip link add veth-test1 type veth peer name veth-test2

[root@docker-node1 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 76155sec preferred_lft 76155sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fee6:45bc/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:25ff:fedb:4963/64 scope link

valid_lft forever preferred_lft forever

6: veth2aaf7ce@if5: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ea:87:9c:ff:2b:ae brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::e887:9cff:feff:2bae/64 scope link

valid_lft forever preferred_lft forever

8: vethf1db1bd@if7: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 62:b7:11:da:66:56 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::60b7:11ff:feda:6656/64 scope link

valid_lft forever preferred_lft forever

12: veth-test2@veth-test1: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 3e:c1:1a:1d:37:3c brd ff:ff:ff:ff:ff:ff

13: veth-test1@veth-test2: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 2a:d1:1d:4b:9f:6c brd ff:ff:ff:ff:ff:ff

Move the two ends of the veth pair into the namespaces test1 and test2. Each namespace gains one link, and devices 12 and 13 are no longer visible on the host.

[root@docker-node1 ~]# ip link set veth-test1 netns test1

[root@docker-node1 ~]# ip netns exec test1 ip link

1: lo: mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

13: veth-test1@if12: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 2a:d1:1d:4b:9f:6c brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@docker-node1 ~]# ip link set veth-test2 netns test2

[root@docker-node1 ~]# ip netns exec test2 ip link

1: lo: mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

12: veth-test2@if13: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 3e:c1:1a:1d:37:3c brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@docker-node1 ~]# ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

3: eth1: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

4: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

6: veth2aaf7ce@if5: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether ea:87:9c:ff:2b:ae brd ff:ff:ff:ff:ff:ff link-netnsid 0

8: vethf1db1bd@if7: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 62:b7:11:da:66:56 brd ff:ff:ff:ff:ff:ff link-netnsid 1

Assign an IP address to each veth device:

[root@docker-node1 ~]# ip netns exec test1 ip addr add 192.168.1.1/24 dev veth-test1

[root@docker-node1 ~]# ip netns exec test2 ip addr add 192.168.1.2/24 dev veth-test2

[root@docker-node1 ~]# ip netns exec test1 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

13: veth-test1@if12: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 2a:d1:1d:4b:9f:6c brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 192.168.1.1/24 scope global veth-test1

valid_lft forever preferred_lft forever

[root@docker-node1 ~]# ip netns exec test2 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

12: veth-test2@if13: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 3e:c1:1a:1d:37:3c brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.2/24 scope global veth-test2

valid_lft forever preferred_lft forever

Bring both veth devices up:

[root@docker-node1 ~]# ip netns exec test1 ip link set dev veth-test1 up

[root@docker-node1 ~]# ip netns exec test2 ip link set dev veth-test2 up

[root@docker-node1 ~]# ip netns exec test1 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

13: veth-test1@if12: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 2a:d1:1d:4b:9f:6c brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet 192.168.1.1/24 scope global veth-test1

valid_lft forever preferred_lft forever

inet6 fe80::28d1:1dff:fe4b:9f6c/64 scope link

valid_lft forever preferred_lft forever

[root@docker-node1 ~]# ip netns exec test2 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

12: veth-test2@if13: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 3e:c1:1a:1d:37:3c brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.2/24 scope global veth-test2

valid_lft forever preferred_lft forever

inet6 fe80::3cc1:1aff:fe1d:373c/64 scope link

valid_lft forever preferred_lft forever

From the test1 namespace, ping the IP address of test2. The two are connected:

[root@docker-node1 ~]# ip netns exec test1 ping 192.168.1.2

PING 192.168.1.2 (192.168.1.2) 56(84) bytes of data.

64 bytes from 192.168.1.2: icmp_seq=1 ttl=64 time=0.065 ms

64 bytes from 192.168.1.2: icmp_seq=2 ttl=64 time=0.058 ms

^C

--- 192.168.1.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.058/0.061/0.065/0.008 ms

[root@docker-node1 ~]#

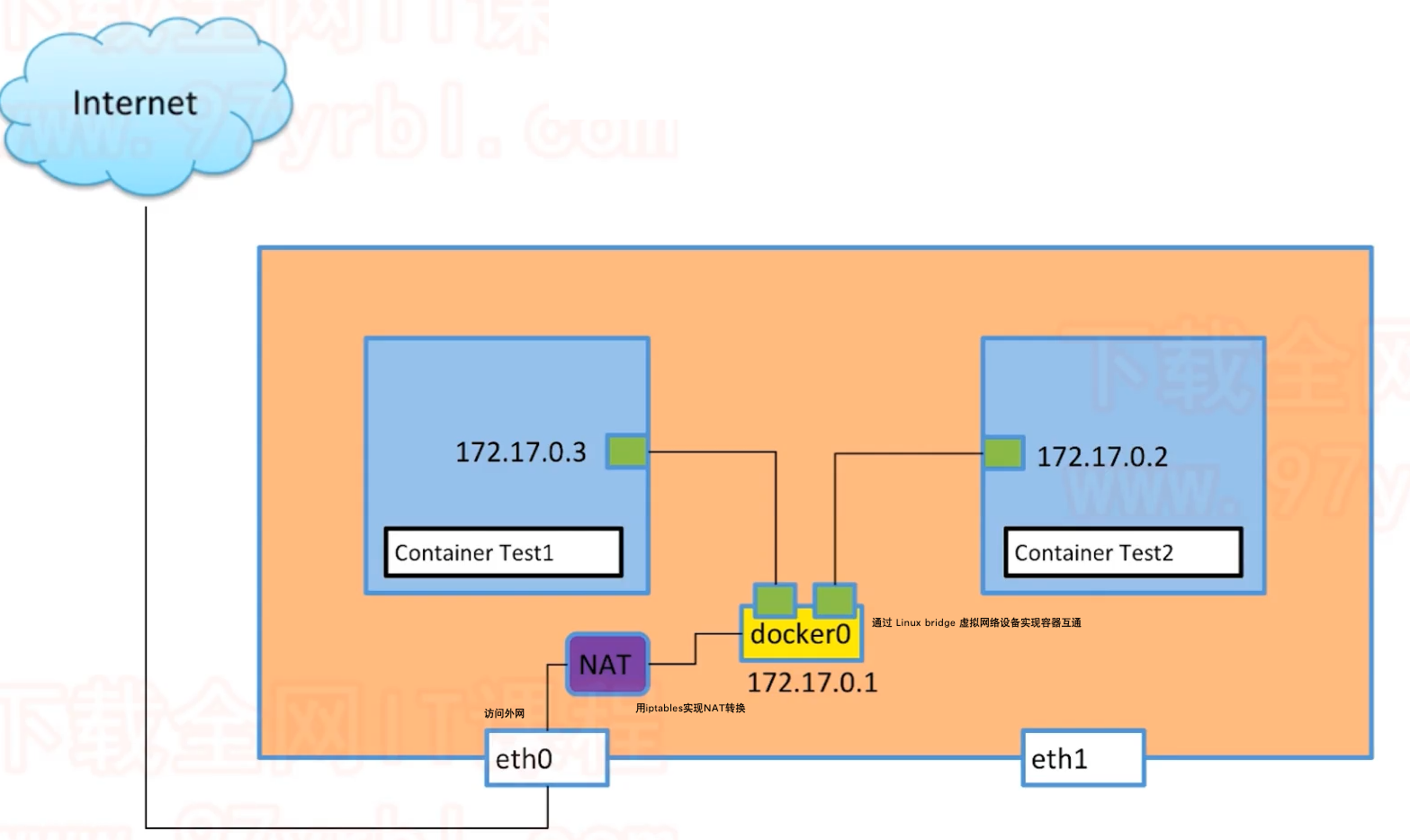
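The walkthrough above can be collected into one script. This is a sketch under a few assumptions: it needs root and the iproute2 `ip` tool, it reuses the names and addresses from the walkthrough, and the RUN_NETNS_DEMO switch is a made-up opt-in so that nothing touches the system unless requested.

```shell
setup_netns_pair() {
  ip netns add test1
  ip netns add test2
  # Create the veth pair on the host, then move one end into each namespace.
  ip link add veth-test1 type veth peer name veth-test2
  ip link set veth-test1 netns test1
  ip link set veth-test2 netns test2
  # Assign addresses and bring both ends up.
  ip netns exec test1 ip addr add 192.168.1.1/24 dev veth-test1
  ip netns exec test2 ip addr add 192.168.1.2/24 dev veth-test2
  ip netns exec test1 ip link set dev veth-test1 up
  ip netns exec test2 ip link set dev veth-test2 up
}

check_netns_pair() {
  # Two echo requests from test1 to the address assigned inside test2.
  ip netns exec test1 ping -c 2 192.168.1.2
}

teardown_netns_pair() {
  # Deleting a namespace also removes the veth end that lives inside it.
  ip netns delete test1
  ip netns delete test2
}

# Opt-in switch (hypothetical): run the demo only when explicitly requested.
if [ "${RUN_NETNS_DEMO:-0}" = "1" ]; then
  setup_netns_pair && check_netns_pair
  teardown_netns_pair
else
  echo "set RUN_NETNS_DEMO=1 and run as root to execute the demo"
fi
```

This is essentially what Docker automates for each container, except that Docker attaches the host end of each veth pair to a bridge instead of placing both ends inside namespaces.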

¶Container-to-container and internet connectivity

List the Docker networks. There are networks named bridge, host, and none, with drivers bridge, host, and null respectively:

[root@docker-node1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

c89adfd37eb3 bridge bridge local

dac2514ae55c host host local

10ac2277dbd2 none null local

One Docker container is currently running:

[root@docker-node1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

040676f4f47f busybox "/bin/sh -c 'while t…" 2 hours ago Up 2 hours test1

Inspecting the bridge network with the inspect command shows that its Containers entry lists the single container above, which means this container's network is attached to bridge.

[root@docker-node1 ~]# docker network inspect c89adfd37eb3

[

{

"Name": "bridge",

"Id": "c89adfd37eb397f31279e63c4eae8c28d01fe85019d32f6244be3af53e3daad6",

"Created": "2020-10-19T05:56:27.683543536Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"040676f4f47f3fb22bc657099e017a884cc79b045cc67ba1732c3681042568b8": {

"Name": "test1",

"EndpointID": "690b3d311fd769eae58091cfd94c6f9575a84720143a76d73d9bb6c268175442",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

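Listing the host's interfaces again shows device 4, docker0, and device 6, veth2aaf7ce@if5. docker0 is a bridge device, and veth2aaf7ce@if5 is one end of a veth pair. Containers reach each other because the host end of each container's veth pair is attached to the same bridge (much as the physical interfaces of two hosts are cabled to the same switch or router). It follows that the other end of this veth device is eth0@if6 in the network namespace of the test1 container.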

[root@docker-node1 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 74159sec preferred_lft 74159sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fee6:45bc/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:25ff:fedb:4963/64 scope link

valid_lft forever preferred_lft forever

6: veth2aaf7ce@if5: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ea:87:9c:ff:2b:ae brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::e887:9cff:feff:2bae/64 scope link

valid_lft forever preferred_lft forever

[root@docker-node1 ~]# docker exec test1 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

5: eth0@if6: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Use the brctl tool to confirm that veth2aaf7ce@if5 is attached to docker0:

[root@docker-node1 ~]# yum install bridge-utils

[root@docker-node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.024225db4963 no veth2aaf7ce

After adding a second container, the Containers entry of docker network inspect bridge lists one more container, ip a shows one more veth device (veth3811212@if14), and brctl show lists one more port on the bridge.

[root@docker-node1 ~]# docker run -d --name test2 busybox /bin/sh -c "while true;do sleep 3600; done"

97c2b77265324f16be66f5ae02eaed3c8175ebc78acc9d5e0e7c3ea0f3d271bd

[root@docker-node1 ~]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "c89adfd37eb397f31279e63c4eae8c28d01fe85019d32f6244be3af53e3daad6",

"Created": "2020-10-19T05:56:27.683543536Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"040676f4f47f3fb22bc657099e017a884cc79b045cc67ba1732c3681042568b8": {

"Name": "test1",

"EndpointID": "690b3d311fd769eae58091cfd94c6f9575a84720143a76d73d9bb6c268175442",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"97c2b77265324f16be66f5ae02eaed3c8175ebc78acc9d5e0e7c3ea0f3d271bd": {

"Name": "test2",

"EndpointID": "3a755087b8b7ea5bbdcd807dcf7230e37fcfdef91e70cbebdfecf0a2e8086354",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

[root@docker-node1 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 73701sec preferred_lft 73701sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fee6:45bc/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:25ff:fedb:4963/64 scope link

valid_lft forever preferred_lft forever

6: veth2aaf7ce@if5: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ea:87:9c:ff:2b:ae brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::e887:9cff:feff:2bae/64 scope link

valid_lft forever preferred_lft forever

15: veth3811212@if14: mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether ae:bf:e2:b8:d5:af brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::acbf:e2ff:feb8:d5af/64 scope link

valid_lft forever preferred_lft forever

[root@docker-node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.024225db4963 no veth2aaf7ce

veth3811212

¶--link and user-defined bridge networks

¶--link

Run two containers, with test2 linked to test1:

[root@docker-node1 ~]# docker run -d --name test1 busybox /bin/sh -c "while true;do sleep 3600;done"

401c4b231a5dbe1ea66e44152b08b15aab088fb1551ccdb447658b8b61cb9892

[root@docker-node1 ~]# docker run -d --name test2 --link test1:alias busybox /bin/sh -c "while true;do sleep 3600;done"

7c531b6d6cde4bc5578c73867a3667acdc09a2ae49583f69a1a528b5246b1a4f

Check the IP addresses:

[root@docker-node1 ~]# docker exec test1 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

30: eth0@if31: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@docker-node1 ~]# docker exec test2 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

32: eth0@if33: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

From test1, test2 can be pinged by IP address but not by name:

[root@docker-node1 ~]# docker exec -it test1 /bin/sh

/ # ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.217 ms

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.084 ms

^C

--- 172.17.0.3 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.084/0.150/0.217 ms

/ # ping test2

ping: bad address 'test2'

/ # exit

From test2, test1 can be pinged both by its name and by the alias “alias” given to --link:

[root@docker-node1 ~]# docker exec -it test2 /bin/sh

/ # ping test1

PING test1 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.075 ms

^C

--- test1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.075/0.075/0.075 ms

/ #

/ # ping alias

PING alias (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.108 ms

^C

--- alias ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.108/0.108/0.108 ms

/ # exit

[root@docker-node1 ~]#

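Under the hood, --link adds name-resolution entries for test1 (its name and the alias) locally inside test2, in its /etc/hosts file, so test1 stays reachable by name even when its IP address is not fixed.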

¶User-defined bridge network

docker network create creates a network whose driver is bridge, backed by a bridge device, here named my-bridge. brctl show confirms that no veth interface is attached to the new bridge yet:

[root@docker-node1 ~]# docker network create -d bridge my-bridge

d3699cfbd400293e08c6c5a4d2da2f6d7226981b875d092bafd6f7d455f38921

[root@docker-node1 ~]# docker network list

NETWORK ID NAME DRIVER SCOPE

c89adfd37eb3 bridge bridge local

dac2514ae55c host host local

d3699cfbd400 my-bridge bridge local

10ac2277dbd2 none null local

[root@docker-node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-d3699cfbd400 8000.0242432c6ca4 no

docker0 8000.024225db4963 no veth6ad4611

vethddc0a25

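Run a container on the user-defined network with the --network flag. The user-defined bridge now has one more interface, veth151f6f7, docker network inspect shows the new container, and its IP address is in the 172.18 subnet rather than the subnet of the two containers started on the default network.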

[root@docker-node1 ~]# docker run -d --name test3 --network my-bridge busybox /bin/sh -c "while true;do sleep 3600;done"

b2e3e66cad7dc1d07fa1e85961cc8bab7c74c76b3a41ec405faf437f051c2fc5

[root@docker-node1 ~]# brctl show

bridge name bridge id STP enabled interfaces

br-d3699cfbd400 8000.0242432c6ca4 no veth151f6f7

docker0 8000.024225db4963 no veth6ad4611

vethddc0a25

[root@docker-node1 ~]# docker network inspect my-bridge

[

{

"Name": "my-bridge",

"Id": "d3699cfbd400293e08c6c5a4d2da2f6d7226981b875d092bafd6f7d455f38921",

"Created": "2020-10-19T08:48:42.200451913Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"b2e3e66cad7dc1d07fa1e85961cc8bab7c74c76b3a41ec405faf437f051c2fc5": {

"Name": "test3",

"EndpointID": "9cf330bc93faf46fa0d869eeffc8bf549b37397c8f231ec323dd0a5bba826ead",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

docker network connect attaches an existing container to another network. The example below connects test2 to my-bridge, so test2 is now on both bridge and my-bridge.

[root@docker-node1 ~]# docker network connect my-bridge test2

[root@docker-node1 ~]# docker network inspect my-bridge

[

{

"Name": "my-bridge",

"Id": "d3699cfbd400293e08c6c5a4d2da2f6d7226981b875d092bafd6f7d455f38921",

"Created": "2020-10-19T08:48:42.200451913Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"7c531b6d6cde4bc5578c73867a3667acdc09a2ae49583f69a1a528b5246b1a4f": {

"Name": "test2",

"EndpointID": "1351f55488ce2c3bedbb77c17e0bf41a22c22c16fb0724564d3a2e93f20af38e",

"MacAddress": "02:42:ac:12:00:03",

"IPv4Address": "172.18.0.3/16",

"IPv6Address": ""

},

"b2e3e66cad7dc1d07fa1e85961cc8bab7c74c76b3a41ec405faf437f051c2fc5": {

"Name": "test3",

"EndpointID": "9cf330bc93faf46fa0d869eeffc8bf549b37397c8f231ec323dd0a5bba826ead",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

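No --link was used between test2 and test3, yet test3 can ping test2 by name. Containers attached to the same user-defined bridge can all reach each other by container name, whereas containers on the default bridge cannot. So test2 can also ping test3 by name, while test3 cannot ping test1, which is attached only to the default bridge.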

[root@docker-node1 ~]# docker exec -it test3 /bin/sh

/ # ping test2

PING test2 (172.18.0.3): 56 data bytes

64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.083 ms

^C

--- test2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.083/0.083/0.083 ms

/ # exit
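The name-resolution behaviour described above can be reproduced from scratch with a short script. This is a sketch: the network and container names (demo-net, demo-a, demo-b) and the RUN_DOCKER_DEMO opt-in switch are made up for this example, and it assumes a working Docker installation with access to the busybox image.

```shell
demo_user_bridge() {
  # A user-defined bridge network with two containers attached to it.
  docker network create -d bridge demo-net
  docker run -d --name demo-a --network demo-net busybox \
    /bin/sh -c "while true; do sleep 3600; done"
  docker run -d --name demo-b --network demo-net busybox \
    /bin/sh -c "while true; do sleep 3600; done"
  # No --link is used, yet the container name resolves on this network.
  docker exec demo-a ping -c 1 demo-b
}

cleanup_user_bridge() {
  docker rm -f demo-a demo-b
  docker network rm demo-net
}

# Opt-in switch (hypothetical): run the demo only when explicitly requested.
if [ "${RUN_DOCKER_DEMO:-0}" = "1" ]; then
  demo_user_bridge
  cleanup_user_bridge
else
  echo "set RUN_DOCKER_DEMO=1 to run the demo against a local Docker daemon"
fi
```

Using fresh names keeps the demo from colliding with the test1, test2, and test3 containers used in the walkthrough.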

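¶host and none networks

A container started with --network none has only the loopback interface; no other interface is created. It can therefore be accessed only by entering it with docker exec, and cannot be reached over the network from the host or from outside.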

[root@docker-node1 ~]# docker run -d --name test1 --network none busybox /bin/sh -c "while true; do sleep 60; done"

ac33d31a6b32e04e6927cb1d91b21cb6acf83e6df850d404d7845f25249c60c0

[root@docker-node1 ~]# docker network inspect none

[

{

"Name": "none",

"Id": "10ac2277dbd296323001001da2dae51ddee8a8ee8ce4fe76580d83b9bbd6387b",

"Created": "2020-10-19T04:37:58.595608046Z",

"Scope": "local",

"Driver": "null",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": []

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"ac33d31a6b32e04e6927cb1d91b21cb6acf83e6df850d404d7845f25249c60c0": {

"Name": "test1",

"EndpointID": "10851450939da6cf590a81b36255c2b83bd6c93577a10e1d6993cd1659d5c675",

"MacAddress": "",

"IPv4Address": "",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@docker-node1 ~]# docker exec test1 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

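A container on the host network shares the host's network namespace and uses the same set of network interfaces, so such containers easily run into port conflicts. For example, two nginx containers started on the host network both use port 80 by default and conflict with each other.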

[root@docker-node1 flask-redis]# docker run -d --name test2 --network host busybox /bin/sh -c "while true; do sleep 60; done"

4ce2ee17282361b97498ae84276e452561257536fc2b6972bdb7bc1095a23778

[root@docker-node1 flask-redis]# docker inspect host

[

{

"Name": "host",

"Id": "dac2514ae55c0933a9528db1cc07f0dfe1999f0ad9b9a5d284b3b96d1f67fcd2",

"Created": "2020-10-19T04:37:58.605734163Z",

"Scope": "local",

"Driver": "host",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": []

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"4ce2ee17282361b97498ae84276e452561257536fc2b6972bdb7bc1095a23778": {

"Name": "test2",

"EndpointID": "28181afba7b0a479c9820f034c15e52ed47e4459aa3b410aa1955ef225eb2b9c",

"MacAddress": "",

"IPv4Address": "",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@docker-node1 flask-redis]# docker exec test2 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic eth0

valid_lft 66842sec preferred_lft 66842sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fee6:45bc/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:25ff:fedb:4963/64 scope link

valid_lft forever preferred_lft forever

34: br-d3699cfbd400: mtu 1500 qdisc noqueue

link/ether 02:42:43:2c:6c:a4 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-d3699cfbd400

valid_lft forever preferred_lft forever

inet6 fe80::42:43ff:fe2c:6ca4/64 scope link

valid_lft forever preferred_lft forever

[root@docker-node1 flask-redis]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 66841sec preferred_lft 66841sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:e6:45:bc brd ff:ff:ff:ff:ff:ff

inet 192.168.205.10/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fee6:45bc/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:25:db:49:63 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:25ff:fedb:4963/64 scope link

valid_lft forever preferred_lft forever

34: br-d3699cfbd400: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:43:2c:6c:a4 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-d3699cfbd400

valid_lft forever preferred_lft forever

inet6 fe80::42:43ff:fe2c:6ca4/64 scope link

valid_lft forever preferred_lft forever

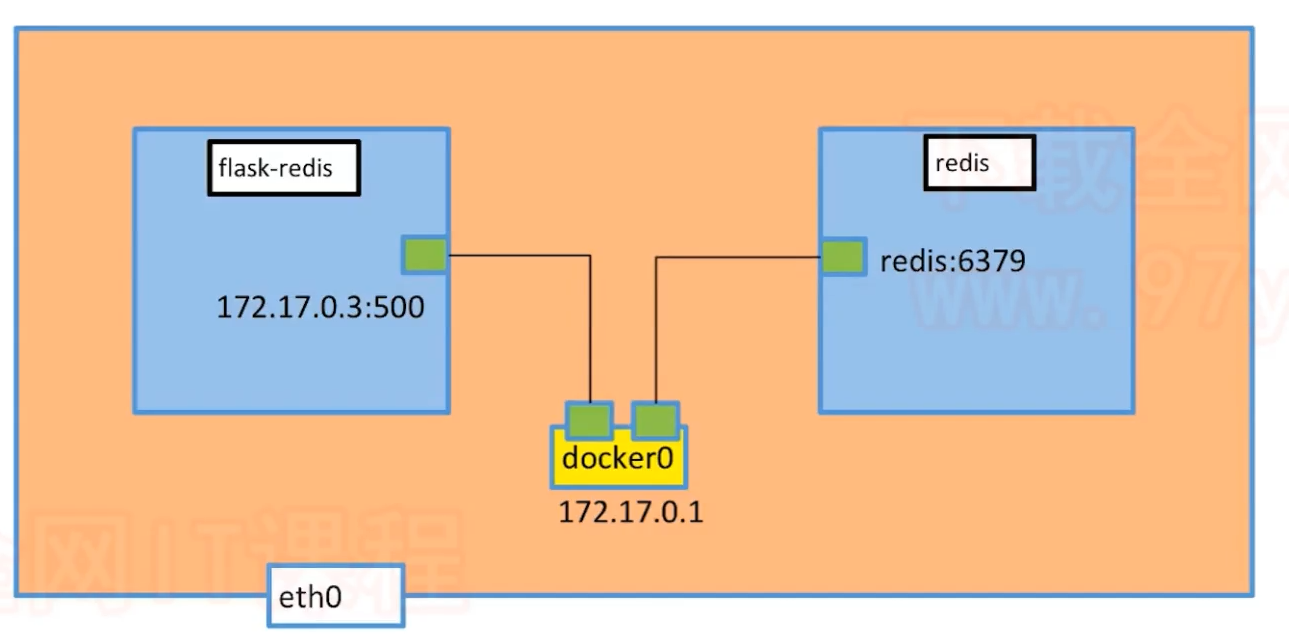

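¶overlay: multi-host networking

As shown below, a Python Flask web application and a Redis container run on the same host. They can reach each other because both are attached to a bridge network.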

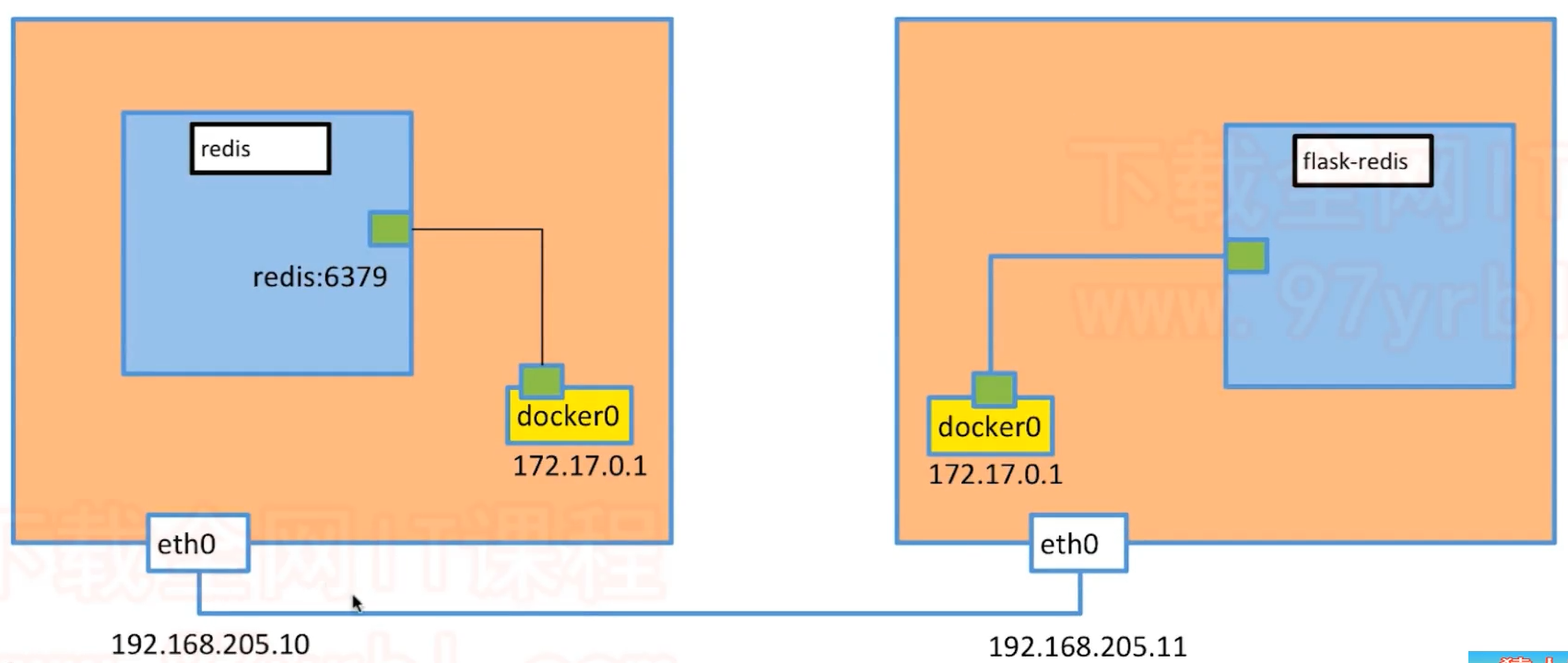

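But how do the two containers communicate when they run on different hosts? In that case each container's private address is only meaningful on its own host's local bridge network. When a packet is addressed to the other container, the sending host's network stack sees a private destination address for which it has no route, so the packet cannot be delivered.

This situation (hosts on different private networks talking to each other) is usually solved with a tunnel: the original packet is wrapped inside another packet that is sent from 192.168.205.10 to 192.168.205.11. The receiver unwraps it, recovers the real addressing information (source and destination IP and port), and processes it; the response is wrapped and sent back the same way. The physical network that carries the wrapped packets is called the underlay, and the virtual network that the original packets belong to is called the overlay. Docker uses this approach (VXLAN encapsulation) for multi-host networking.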
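The tunnelling idea can be sketched in a few lines of Python. This is only a toy model, not Docker's VXLAN implementation: the `Packet` class and the `encapsulate`/`decapsulate` helpers are hypothetical names introduced for illustration, and the container addresses 10.0.0.2 and 10.0.0.3 are the ones that appear later in these notes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """A toy packet: source address, destination address, payload."""
    src: str
    dst: str
    payload: object


def encapsulate(inner: Packet, host_src: str, host_dst: str) -> Packet:
    """Wrap the container-to-container packet in a host-to-host packet."""
    return Packet(src=host_src, dst=host_dst, payload=inner)


def decapsulate(outer: Packet) -> Packet:
    """Recover the original packet on the receiving host."""
    if not isinstance(outer.payload, Packet):
        raise ValueError("not an encapsulated packet")
    return outer.payload


# Overlay traffic between two containers on different hosts.
inner = Packet(src="10.0.0.2", dst="10.0.0.3", payload=b"ping")
# Underlay traffic between the two hosts that carries it.
outer = encapsulate(inner, "192.168.205.10", "192.168.205.11")
print(outer.src, "->", outer.dst, "carries", decapsulate(outer).dst)
```

The underlay only ever routes on the outer host addresses; the container addresses stay hidden in the payload until the receiving host unwraps them.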

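¶Configuring an overlay network

¶1> Download and start etcd

Docker Engine implements its distributed network on top of the overlay driver and uses the distributed store etcd to hold information about the nodes (containers) in that network, so etcd must be installed and started on every host:

Install and start etcd on host docker-node1: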

[root@docker-node1 /]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.25/etcd-v3.3.25-linux-amd64.tar.gz

[root@docker-node1 /]# tar -zxf etcd-v3.3.25-linux-amd64.tar.gz

[root@docker-node1 /]# cd etcd-v3.3.25-linux-amd64

[root@docker-node1 etcd-v3.3.25-linux-amd64]# nohup ./etcd --name docker-node1 --initial-advertise-peer-urls http://192.168.205.10:2380 \

> --listen-peer-urls http://192.168.205.10:2380 \

> --listen-client-urls http://192.168.205.10:2379,http://127.0.0.1:2379 \

> --advertise-client-urls http://192.168.205.10:2379 \

> --initial-cluster-token etcd-cluster \

> --initial-cluster docker-node1=http://192.168.205.10:2380,docker-node2=http://192.168.205.11:2380 \

> --initial-cluster-state new&

[1] 32761

[root@docker-node1 etcd-v3.3.25-linux-amd64]# nohup: ignoring input and appending output to 'nohup.out'

Install and start etcd on host docker-node2:

[root@docker-node2 /]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.25/etcd-v3.3.25-linux-amd64.tar.gz

[root@docker-node2 /]# tar -zxf etcd-v3.3.25-linux-amd64.tar.gz

[root@docker-node2 /]# cd etcd-v3.3.25-linux-amd64

[root@docker-node2 etcd-v3.3.25-linux-amd64]# nohup ./etcd --name docker-node2 --initial-advertise-peer-urls http://192.168.205.11:2380 \

> --listen-peer-urls http://192.168.205.11:2380 \

> --listen-client-urls http://192.168.205.11:2379,http://127.0.0.1:2379 \

> --advertise-client-urls http://192.168.205.11:2379 \

> --initial-cluster-token etcd-cluster \

> --initial-cluster docker-node1=http://192.168.205.10:2380,docker-node2=http://192.168.205.11:2380 \

> --initial-cluster-state new&

[1] 29667

[root@docker-node2 etcd-v3.3.25-linux-amd64]# nohup: ignoring input and appending output to 'nohup.out'

Check the health of the etcd cluster on host docker-node1:

[root@docker-node1 etcd-v3.3.25-linux-amd64]# ./etcdctl cluster-health

member 21eca106efe4caee is healthy: got healthy result from http://192.168.205.10:2379

member 8614974c83d1cc6d is healthy: got healthy result from http://192.168.205.11:2379

cluster is healthy

Check the health of the etcd cluster on host docker-node2:

[root@docker-node2 etcd-v3.3.25-linux-amd64]# ./etcdctl cluster-health

member 21eca106efe4caee is healthy: got healthy result from http://192.168.205.10:2379

member 8614974c83d1cc6d is healthy: got healthy result from http://192.168.205.11:2379

cluster is healthy

¶2> Restart dockerd

With etcd running, restart the Docker daemon so that it connects to etcd:

Restart on host docker-node1:

[root@docker-node1 etcd-v3.3.25-linux-amd64]# systemctl stop docker

[root@docker-node1 etcd-v3.3.25-linux-amd64]# /usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --cluster-store=etcd://192.168.205.10:2379 --cluster-advertise=192.168.205.10:2375&

Restart on host docker-node2:

[root@docker-node2 etcd-v3.3.25-linux-amd64]# systemctl stop docker

[root@docker-node2 etcd-v3.3.25-linux-amd64]# /usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock --cluster-store=etcd://192.168.205.11:2379 --cluster-advertise=192.168.205.11:2375&

¶3> Create the overlay network

On one of the hosts, create an overlay network named demo:

[root@docker-node1 etcd-v3.3.25-linux-amd64]# docker network ls

NETWORK ID NAME DRIVER SCOPE

0209d911ddf0 bridge bridge local

093639f6808b host host local

917d8e6230b0 none null local

[root@docker-node1 etcd-v3.3.25-linux-amd64]# sudo docker network create -d overlay demo

042b9fe688167f5263372dffddaff7cf1712bbf68e2bf5e276af0470707d02bd

[root@docker-node1 etcd-v3.3.25-linux-amd64]# docker network ls

NETWORK ID NAME DRIVER SCOPE

0209d911ddf0 bridge bridge local

042b9fe68816 demo overlay global

093639f6808b host host local

917d8e6230b0 none null local

The network is also visible on the other host, which shows that the two hosts running etcd share the same network state:

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker network ls

NETWORK ID NAME DRIVER SCOPE

7929f7d4a27a bridge bridge local

042b9fe68816 demo overlay global

58598d4dd274 host host local

5db4751f8c47 none null local

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker inspect demo

[

{

"Name": "demo",

"Id": "042b9fe688167f5263372dffddaff7cf1712bbf68e2bf5e276af0470707d02bd",

"Created": "2020-10-20T06:19:30.581789942Z",

"Scope": "global",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "10.0.0.0/24",

"Gateway": "10.0.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

This synchronization goes through etcd; the stored entries can be inspected directly with etcdctl:

[root@docker-node2 etcd-v3.3.25-linux-amd64]# ./etcdctl ls /docker/nodes

/docker/nodes/192.168.205.10:2375

/docker/nodes/192.168.205.11:2375

[root@docker-node2 etcd-v3.3.25-linux-amd64]# ./etcdctl ls /docker/network/v1.0/network

/docker/network/v1.0/network/042b9fe688167f5263372dffddaff7cf1712bbf68e2bf5e276af0470707d02bd

On host node1, run a container named test1 attached to the demo overlay network:

[root@docker-node1 etcd-v3.3.25-linux-amd64]# docker run -d --name test1 --network demo busybox /bin/sh -c "while true;do sleep 60;done"

83bf9479fbabce2fa3297fbe469749cefec0ff6586dc5ac919ddebcab5bc96a3

Running a container with the same name on the same network on host node2 now fails, because the endpoint already exists:

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker run -d --name test1 --network demo busybox /bin/sh -c "while true;do sleep 60;done"

docker: Error response from daemon: endpoint with name test1 already exists in network demo.
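The name clash across hosts follows from both daemons consulting the same shared store. A minimal sketch of that idea, assuming a simple in-memory dictionary in place of etcd (the `EndpointStore` class is hypothetical and not part of Docker or etcd):

```python
class EndpointStore:
    """Toy stand-in for the shared key-value store both daemons talk to."""

    def __init__(self):
        # (network name, endpoint name) -> host that registered it
        self._endpoints = {}

    def register(self, network: str, name: str, host: str) -> None:
        key = (network, name)
        if key in self._endpoints:
            raise ValueError(
                f"endpoint with name {name} already exists in network {network}"
            )
        self._endpoints[key] = host

    def hosts(self, network: str) -> dict:
        """Return {endpoint name: host} for one network."""
        return {n: h for (net, n), h in self._endpoints.items() if net == network}


store = EndpointStore()
store.register("demo", "test1", "docker-node1")
try:
    # A second host tries to reuse the name on the same network.
    store.register("demo", "test1", "docker-node2")
except ValueError as err:
    print(err)
store.register("demo", "test2", "docker-node2")
```

Because the uniqueness check happens in the shared store rather than on each host, the name test1 is reserved cluster-wide for the demo network.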

Run a container named test2 on the demo overlay network instead. Inspecting demo then shows two container endpoints, test1 and test2, with the IP addresses 10.0.0.2 and 10.0.0.3:

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker run -d --name test2 --network demo busybox /bin/sh -c "while true;do sleep 60;done"

7749fd634491a1bf7636cb693466b40bf04ea3559cd00784e898ffa24fac32ed

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker inspect demo

[

{

"Name": "demo",

"Id": "042b9fe688167f5263372dffddaff7cf1712bbf68e2bf5e276af0470707d02bd",

"Created": "2020-10-20T06:19:30.581789942Z",

"Scope": "global",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "10.0.0.0/24",

"Gateway": "10.0.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"7749fd634491a1bf7636cb693466b40bf04ea3559cd00784e898ffa24fac32ed": {

"Name": "test2",

"EndpointID": "a61eb9838177fbedee02ad926480ac565bf4c43b29a2e8c56aa8de4bd9c1ebf1",

"MacAddress": "02:42:0a:00:00:03",

"IPv4Address": "10.0.0.3/24",

"IPv6Address": ""

},

"ep-2fc584506d058dd7d41dac1dcda9dd153abe8ff799a1e51edc9ec791e9ac757d": {

"Name": "test1",

"EndpointID": "2fc584506d058dd7d41dac1dcda9dd153abe8ff799a1e51edc9ec791e9ac757d",

"MacAddress": "02:42:0a:00:00:02",

"IPv4Address": "10.0.0.2/24",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

The two containers can now reach each other:

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker exec test2 ping test1

PING test1 (10.0.0.2): 56 data bytes

64 bytes from 10.0.0.2: seq=0 ttl=64 time=0.860 ms

64 bytes from 10.0.0.2: seq=1 ttl=64 time=1.102 ms

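Taking the test2 container as an example, it has not only the interface with 10.0.0.3 but also one with 172.18.0.2, and host node2 has gained a bridge network named docker_gwbridge. Running ip a on the host shows the address of the docker_gwbridge bridge, and host device 15, vethf2fc083@if14, forms a veth pair with device 14, eth1@if15, inside test2. In other words, each container attached to an overlay network gets two veth devices: one connects to a separately created bridge on the host (docker_gwbridge) and carries traffic between the container and the host, and the other connects to a virtual device that Docker creates for the overlay network itself.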

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker exec test2 ip a

1: lo: mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

11: eth0@if12: mtu 1450 qdisc noqueue

link/ether 02:42:0a:00:00:03 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.3/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

14: eth1@if15: mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1

valid_lft forever preferred_lft forever

[root@docker-node2 etcd-v3.3.25-linux-amd64]# docker network ls

NETWORK ID NAME DRIVER SCOPE

7929f7d4a27a bridge bridge local

042b9fe68816 demo overlay global

f780bd743e0a docker_gwbridge bridge local

58598d4dd274 host host local

5db4751f8c47 none null local

[root@docker-node2 etcd-v3.3.25-linux-amd64]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 75314sec preferred_lft 75314sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:45:8b:91 brd ff:ff:ff:ff:ff:ff

inet 192.168.205.11/24 brd 192.168.205.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe45:8b91/64 scope link

valid_lft forever preferred_lft forever

4: docker0: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:57:e6:8b:12 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:57ff:fee6:8b12/64 scope link

valid_lft forever preferred_lft forever

13: docker_gwbridge: mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:2b:b0:6c:74 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global docker_gwbridge

valid_lft forever preferred_lft forever

inet6 fe80::42:2bff:feb0:6c74/64 scope link

valid_lft forever preferred_lft forever

15: vethf2fc083@if14: mtu 1500 qdisc noqueue master docker_gwbridge state UP group default

link/ether 62:e4:1c:73:ba:0a brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::60e4:1cff:fe73:ba0a/64 scope link

valid_lft forever preferred_lft forever

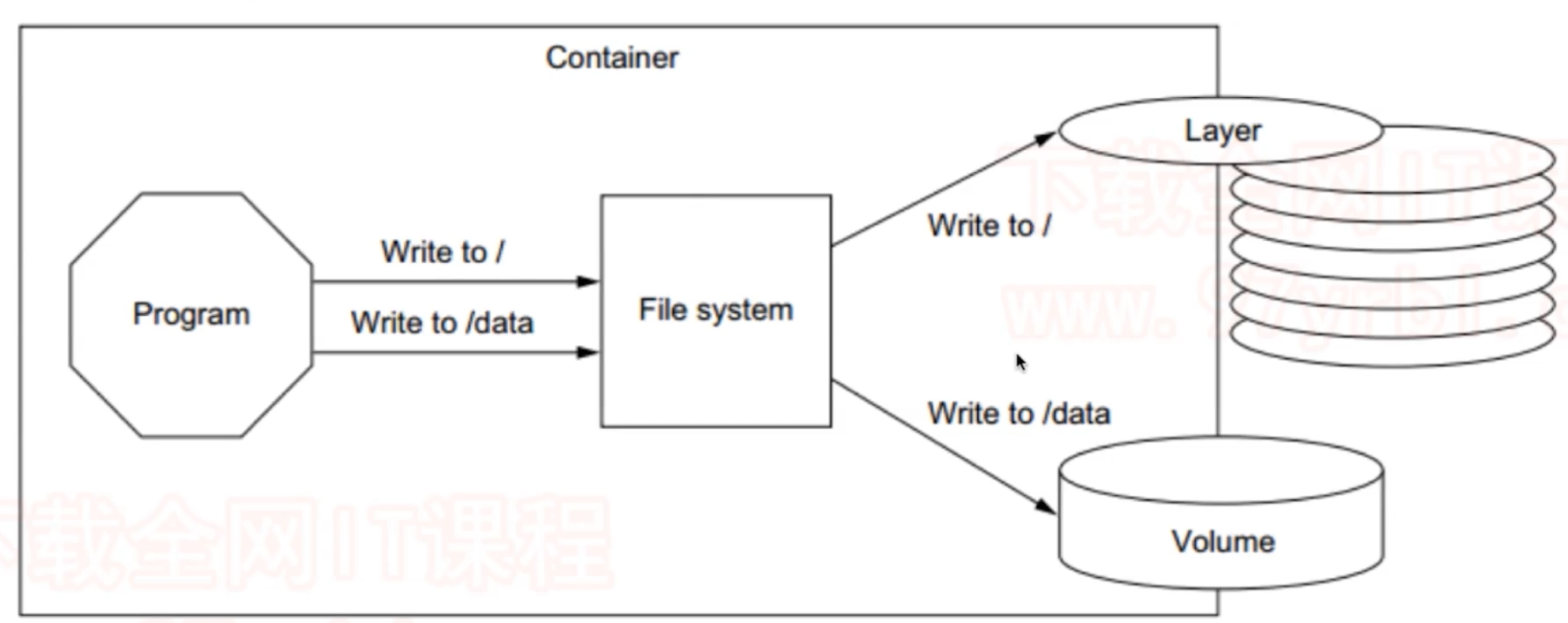

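Docker persistent storage

Data written to a container's read-write layer is lost when the container is removed.

Mounting a piece of host storage into the container makes the data persistent.

¶Docker data persistence options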

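- Local-filesystem volumes. When running docker create or docker run, the -v option mounts host storage into the container as a data volume. This is volume management based on the local file system, and it comes in two forms:

- Data Mounting: a managed data volume, created automatically by the Docker daemon and mounted at a path on the host that Docker chooses.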

Take the official MySQL Dockerfile as an example. Its VOLUME instruction declares /var/lib/mysql as a volume, so the path /var/lib/mysql in the container is mounted to a path on the host. First run a MySQL container:

[root@docker-host ~]# sudo docker run -d --name mysql1 -e MYSQL_ALLOW_EMPTY_PASSWORD=true mysql

Unable to find image 'mysql:latest' locally

latest: Pulling from library/mysql

bb79b6b2107f: Pull complete

49e22f6fb9f7: Pull complete

842b1255668c: Pull complete

9f48d1f43000: Pull complete

c693f0615bce: Pull complete

8a621b9dbed2: Pull complete

0807d32aef13: Pull complete

9eb4355ba450: Pull complete

6879faad3b6c: Pull complete

164ef92f3887: Pull complete

6e4a6e666228: Pull complete

d45dea7731ad: Pull complete

Digest: sha256:86b7c83e24c824163927db1016d5ab153a9a04358951be8b236171286e3289a4

Status: Downloaded newer image for mysql:latest

27e7c2dc9827d3dc485a97e36b71384abea77e634fc424f08b43865f52c989e3

[root@docker-host ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

27e7c2dc9827 mysql "docker-entrypoint.s…" 12 seconds ago Up 11 seconds 3306/tcp, 33060/tcp mysql1

Listing Docker's volumes shows that when no volume name is given with -v, the volume name is a random ID, and every new mysql container adds another volume. The volumes remain after the containers are stopped and removed. docker volume inspect shows which host path a volume is mounted from, and docker volume rm deletes volumes:

[root@docker-host ~]# docker volume ls

DRIVER VOLUME NAME

local d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed

[root@docker-host ~]# docker volume inspect d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed

[

{

"CreatedAt": "2020-10-20T07:26:05Z",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed/_data",

"Name": "d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed",

"Options": null,

"Scope": "local"

}

]

[root@docker-host ~]# sudo docker run -d --name mysql2 -e MYSQL_ALLOW_EMPTY_PASSWORD=true mysql

6550c8cdc4206c561107afd628b9b107fc88ea5aa562ef40fd7e86d25febc160

[root@docker-host ~]# docker volume ls

DRIVER VOLUME NAME

local d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed

local fec2daa7b61f5eef6ccc130fb76191374d65c18844d547afd384a69e7868b368

[root@docker-host ~]# docker volume inspect fec2daa7b61f5eef6ccc130fb76191374d65c18844d547afd384a69e7868b368

[

{

"CreatedAt": "2020-10-20T07:27:24Z",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/fec2daa7b61f5eef6ccc130fb76191374d65c18844d547afd384a69e7868b368/_data",

"Name": "fec2daa7b61f5eef6ccc130fb76191374d65c18844d547afd384a69e7868b368",

"Options": null,

"Scope": "local"

}

]

[root@docker-host ~]# docker stop mysql1 mysql2

mysql1

mysql2

[root@docker-host ~]# docker rm mysql1 mysql2

mysql1

mysql2

[root@docker-host ~]# docker volume ls

DRIVER VOLUME NAME

local d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed

local fec2daa7b61f5eef6ccc130fb76191374d65c18844d547afd384a69e7868b368

[root@docker-host ~]# docker volume rm d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed fec2daa7b61f5eef6ccc130fb76191374d65c18844d547afd384a69e7868b368

d8d22755a81cef64966748478352b3fc807f013f90d44ced2b586bc3850007ed

fec2daa7b61f5eef6ccc130fb76191374d65c18844d547afd384a69e7868b368

When -v names the volume mysql, the volume can be reused: after the mysql1 container is removed, a new mysql2 container keeps running on mysql1's volume and no data is lost. For example, the database docker created in mysql1 is visible in mysql2:

[root@docker-host ~]# docker run -d -v mysql:/var/lib/mysql --name mysql1 -e MYSQL_ALLOW_EMPTY_PASSWORD=true mysql

0b02325ec880a7c37dfb482e12279bd4a9ef36d52622489cb024d7972cee12ee

[root@docker-host ~]# docker volume ls

DRIVER VOLUME NAME

local mysql

[root@docker-host ~]# docker exec -it mysql1 /bin/sh

# exit

[root@docker-host ~]# clear

[root@docker-host ~]# docker exec -it mysql1 /bin/bash

root@0b02325ec880:/# mysql -uroot

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 8

Server version: 8.0.21 MySQL Community Server - GPL

Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database           |

+--------------------+

| information_schema |

| mysql              |

| performance_schema |

| sys                |

+--------------------+

4 rows in set (0.02 sec)

mysql> create database docker;

Query OK, 1 row affected (0.01 sec)

mysql> exit

Bye

root@0b02325ec880:/# exit

exit

[root@docker-host ~]# docker rm -f mysql1

mysql1

[root@docker-host ~]# docker volume ls

DRIVER VOLUME NAME

local mysql

[root@docker-host ~]# docker run -d -v mysql:/var/lib/mysql --name mysql2 -e MYSQL_ALLOW_EMPTY_PASSWORD=true mysql

1c2ae7fc37ce41e8f40ac8e46c1926ad8a22fa79c92d42b953c147e1e4606aae

[root@docker-host ~]# docker exec -it mysql2 /bin/bash

root@1c2ae7fc37ce:/# mysql -uroot

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 8

Server version: 8.0.21 MySQL Community Server - GPL

Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database           |

+--------------------+

| docker             |

| information_schema |

| mysql              |

| performance_schema |

| sys                |

+--------------------+

5 rows in set (0.01 sec)

mysql> exit

Bye

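- Bind Mounting: a bind-mounted volume whose host location is chosen by the user, that is, the container path is mounted from a host path that the user specifies.

The -v option takes up to three fields separated by colons (":"):

- The first field is the source on the host. If it starts with a slash ("/"), it is an absolute path and the mount is a Bind Mount whose host path is chosen by the user. If it does not start with a slash, it is treated as a volume name; if it is omitted, Docker generates a random ID to use as the name. Both of these cases are the Data Mounting mode described above. Calling this name ${related_path}, the data is stored on the host under /var/lib/docker/volumes/${related_path}/_data, and the volume is named ${related_path}.

- The second field is the path inside the container to mount at.

- The third field sets the access mode of that path inside the container, for example ro for read-only.

Data Mounting is mainly for data produced inside the container that must survive the container's removal. Bind Mounting is for data that is also produced on the host, such as source code, so that it can be edited directly on the host.

- Plugin-based volumes, which support third-party storage such as NAS or AWS.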
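The rules for the -v fields can be sketched as a small classifier. This is a simplified illustration rather than Docker's actual parser: `classify_volume_spec` is a hypothetical helper, and it assumes Linux-style paths and ignores relative host paths and Windows drive letters.

```python
def classify_volume_spec(spec: str) -> dict:
    """Classify a `-v` argument as bind mount, named volume, or anonymous volume."""
    parts = spec.split(":")
    if len(parts) == 1:
        # Only a container path: Docker creates a volume with a random ID.
        return {"kind": "anonymous volume", "source": None,
                "target": parts[0], "mode": "rw"}
    source, target = parts[0], parts[1]
    mode = parts[2] if len(parts) > 2 else "rw"
    # An absolute host path means a bind mount; anything else is a volume name.
    kind = "bind mount" if source.startswith("/") else "named volume"
    return {"kind": kind, "source": source, "target": target, "mode": mode}


for example in ("mysql:/var/lib/mysql", "/home/vagrant/labs:/app:ro", "/var/lib/mysql"):
    print(example, "->", classify_volume_spec(example)["kind"])
```

The third example corresponds to what the VOLUME instruction in the MySQL Dockerfile produces when no -v option is given.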

Docker Compose

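- Docker Compose is a tool.

- It defines a multi-container Docker application in a single yml file.

- A single command creates or manages all of those containers according to the yml file.

- A version 2 file can only be deployed on a single host with docker-compose; a version 3 file can also be deployed across multiple hosts with the docker stack command on a swarm cluster.

The yml file is named docker-compose.yml by default, and the name can be changed. It has three key concepts: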

- Services

A service represents a container. The container can be created from an image pulled from Docker Hub or from an image built from a local Dockerfile.

Starting a service is similar to docker run, so a service can reference networks and volumes.

Examples:

Below is a service named db; image means the image is pulled from Docker Hub.

services:

  db:

    image: postgres:9.4

    volumes:

      - "db-data:/var/lib/postgresql/data" # references a volume named db-data

    networks:

      - back-tier # references a network named back-tier

This is similar to:

docker run -d --network back-tier -v db-data:/var/lib/postgresql/data postgres:9.4

Here services contains a service named worker. build gives the directory containing the Dockerfile, meaning the image is built locally rather than pulled from Docker Hub. links corresponds to the --link option.

services:

  worker:

    build: ./worker

    links:

      - db

      - redis

    networks:

      - back-tier

- Volumes

volumes:

  db-data:

This is similar to:

docker volume create db-data

- Networks

networks:

  front-tier:

    driver: bridge

  back-tier:

    driver: bridge

This is similar to:

docker network create -d bridge front-tier

docker network create -d bridge back-tier

¶Complete example

Below is a docker compose yml file that starts a WordPress blog:

version: '3' # use the version 3 docker compose file format

services:

  wordpress:

    image: wordpress

    ports: # port mappings, like the -p option

      - 8080:80

    environment: # environment variables, like the -e option

      WORDPRESS_DB_HOST: mysql

      WORDPRESS_DB_PASSWORD: root

    networks: # networks to attach to

      - my-bridge

  mysql:

    image: mysql

    environment:

      MYSQL_ROOT_PASSWORD: root

      MYSQL_DATABASE: wordpress

    volumes:

      - mysql-data:/var/lib/mysql

    networks:

      - my-bridge

volumes:

  mysql-data:

networks:

  my-bridge:

    driver: bridge # network driver

It is roughly equivalent to the docker commands below, although the networking differs: the compose file uses a user-defined bridge network, while the docker commands use --link:

[root@docker-host docker-nginx]# docker run -d --name mysql -v mysql-data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=root -e MYSQL_DATABASE=wordpress mysql

4a37f84fe3e870df8e3959c00f118ca6b858f7aa820b56fb3e9410add47240e3

[root@docker-host docker-nginx]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4a37f84fe3e8 mysql "docker-entrypoint.s…" 8 seconds ago Up 7 seconds 3306/tcp, 33060/tcp mysql

[root@docker-host docker-nginx]# docker run -d -e WORDPRESS_DB_HOST=mysql:3306 --link mysql -p 8080:80 wordpress

1eb7f0c302c76ff38e645f48c0ab8633d16ccb839bd6c0095a72b75f957c4411

[root@docker-host docker-nginx]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1eb7f0c302c7 wordpress "docker-entrypoint.s…" 4 seconds ago Up 4 seconds 0.0.0.0:8080->80/tcp confident_chaplygin

4a37f84fe3e8 mysql "docker-entrypoint.s…" 5 minutes ago Up 5 minutes 3306/tcp, 33060/tcp mysql
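The correspondence between a compose service definition and docker run options can be made explicit with a small sketch. `docker_run_args` is a hypothetical helper written for these notes; it covers only the keys used in the WordPress example and is not how docker-compose works internally.

```python
def docker_run_args(name: str, service: dict) -> list:
    """Translate a (simplified) compose service mapping into a docker run argv."""
    args = ["docker", "run", "-d", "--name", name]
    for port in service.get("ports", []):
        args += ["-p", str(port)]                 # ports       -> -p
    for key, value in service.get("environment", {}).items():
        args += ["-e", f"{key}={value}"]          # environment -> -e
    for volume in service.get("volumes", []):
        args += ["-v", volume]                    # volumes     -> -v
    for network in service.get("networks", []):
        args += ["--network", network]            # networks    -> --network
    args.append(service["image"])
    return args


# The mysql service from the compose file above, written as a Python dict.
mysql = {
    "image": "mysql",
    "environment": {"MYSQL_ROOT_PASSWORD": "root", "MYSQL_DATABASE": "wordpress"},
    "volumes": ["mysql-data:/var/lib/mysql"],
    "networks": ["my-bridge"],
}
print(" ".join(docker_run_args("mysql", mysql)))
```

Unlike the transcript above, the generated command attaches the container to the my-bridge network, so that network would have to exist first (docker network create -d bridge my-bridge).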

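¶Commands

Note that docker-compose commands always operate on a yml file: unless one is given with -f, you must cd into the directory containing the yml file before running docker-compose. In addition, the containers that docker-compose creates get a prefix added to their names to keep them unique. docker-compose commands can therefore use the names defined in the yml file directly, but plain docker commands need the full container name.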
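As a rough illustration of the naming, the sketch below builds a full container name from a project name, a service name, and an index. The pattern is inferred from names such as wordpress_mysql_1 that docker-compose printed in these notes; the `compose_container_name` helper and its `separator` parameter are assumptions, and other Compose versions may use a different separator.

```python
def compose_container_name(project: str, service: str, index: int = 1,
                           separator: str = "_") -> str:
    """Build the full container name: <project><sep><service><sep><index>."""
    return separator.join([project, service, str(index)])


# The project name is assumed to default to the directory holding the yml file.
print(compose_container_name("wordpress", "mysql"))
print(compose_container_name("wordpress", "wordpress", 2))
```

This full name is what plain docker commands such as docker logs or docker exec expect.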

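- docker-compose up: runs the yml file.

  - -d: run in the background without printing logs to the terminal.

  - --scale <service-name>=<run-num-of-container>: run the given number of containers for the given service, which amounts to horizontal scaling. Check whether the service's yml definition hard-codes a binding to one fixed host port, because the replicas would then conflict. Scaling with docker-compose only works on a single host.

- docker-compose ps: lists the status of the containers.

[root@docker-host wordpress]# docker-compose ps

Name                    Command                        State   Ports

-------------------------------------------------------------------------------------

wordpress_mysql_1       docker-entrypoint.sh mysqld    Up      3306/tcp, 33060/tcp

wordpress_wordpress_1   docker-entrypoint.sh apach ... Up      0.0.0.0:8080->80/tcp

- docker-compose stop: stops the containers.

- docker-compose down: stops and removes the containers and networks. Named volumes are removed only when -v is given, and images are not removed.

- docker-compose start: starts the containers.

- docker-compose images: lists the images used by the containers defined in the yml file.

- docker-compose exec: essentially the same as docker exec.

- docker-compose build: builds the images that the services need. When an image's sources change, run this command again and then docker-compose up.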
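The port conflict mentioned for --scale can be checked mechanically. The sketch below is an illustration only: `host_ports` and `scale_conflicts` are hypothetical helpers, and they assume the short port syntax HOST:CONTAINER or IP:HOST:CONTAINER with a single port on each side.

```python
def host_ports(port_mappings) -> list:
    """Extract fixed host ports from short-syntax port mappings."""
    ports = []
    for mapping in port_mappings:
        parts = str(mapping).split(":")
        if len(parts) >= 2:
            # "8080:80" -> "8080"; "127.0.0.1:8080:80" -> "8080"
            ports.append(parts[-2])
        # A bare "80" publishes on a random host port, so nothing is fixed.
    return ports


def scale_conflicts(port_mappings, replicas: int) -> list:
    """Return the host ports that several replicas would fight over."""
    return host_ports(port_mappings) if replicas > 1 else []


print(scale_conflicts(["8080:80"], 3))   # every replica wants host port 8080
print(scale_conflicts(["80"], 3))        # no fixed host port, no conflict
```

A service that must be scaled on one host therefore either publishes no fixed host port or sits behind a separate load balancer container.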

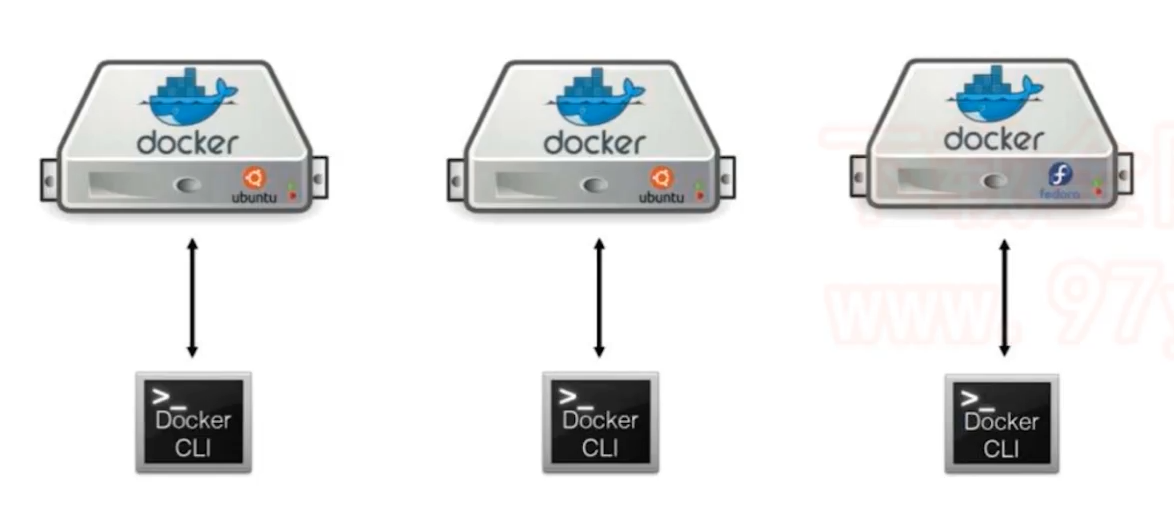

Docker Swarm

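When everything runs on a single host with docker run and docker-compose, the following questions come up:

- How do we manage so many containers?

- How can we scale out easily?

- If a container goes down, how is it recovered automatically?

- How do we update containers without affecting the business?

- How do we monitor and trace these containers?

- How is container creation scheduled?

- How do we protect sensitive data?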

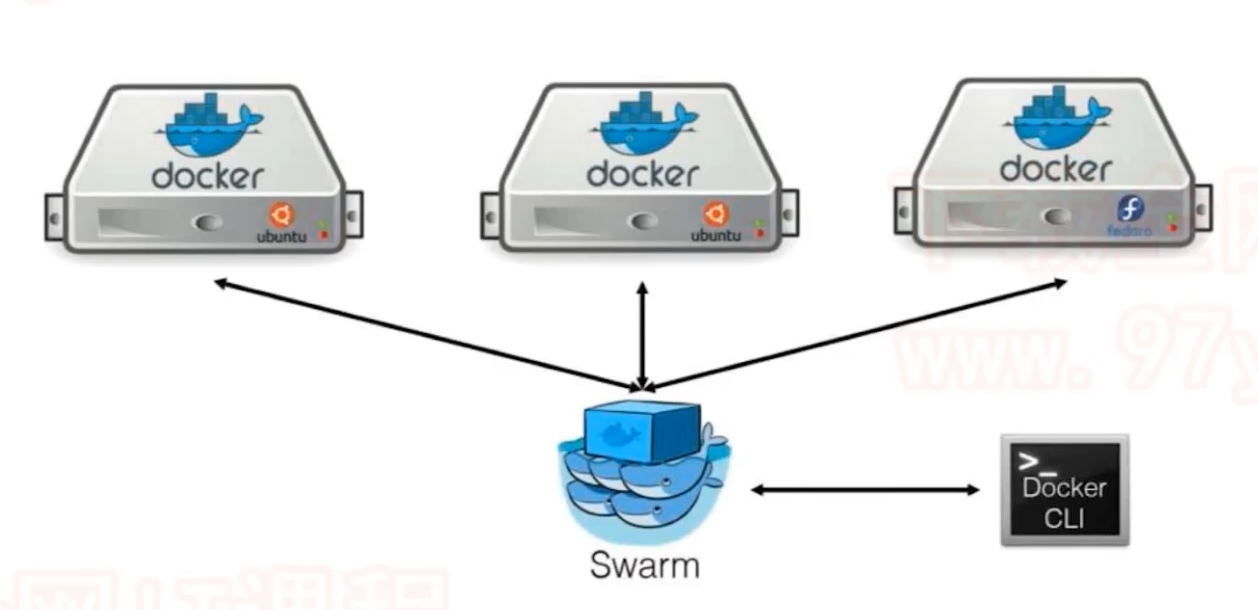

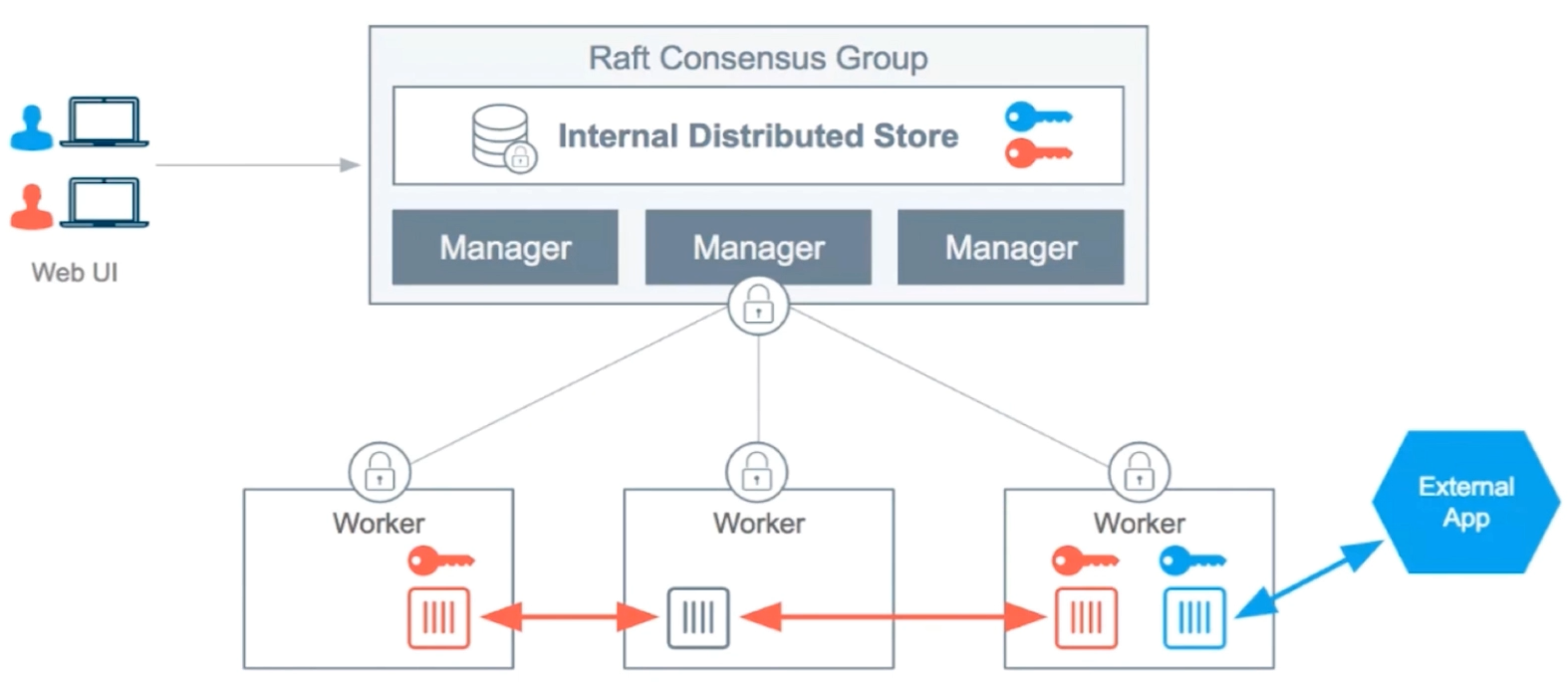

¶Swarm mode

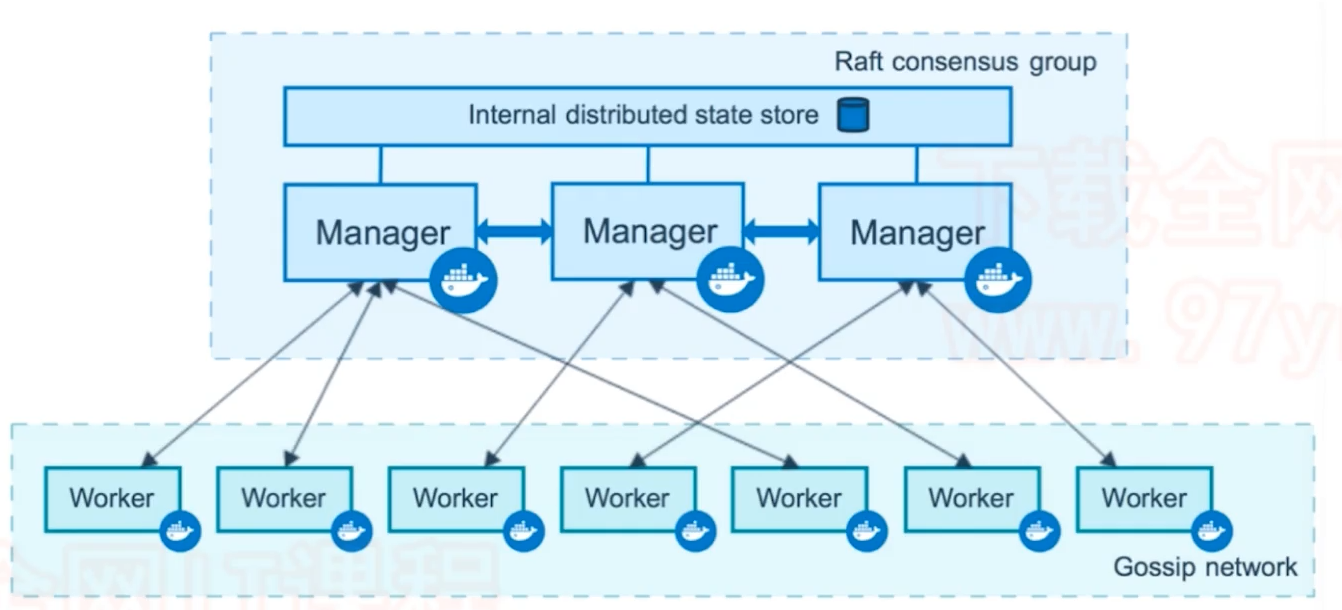

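¶Architecture

- Manager nodes have a built-in distributed store and synchronize their data with the Raft protocol, which prevents split-brain. Raft needs a majority of the managers to be reachable, so a highly available cluster should run an odd number of managers, at least three; a cluster with two managers cannot tolerate the loss of either one.

- Worker nodes do the actual work and synchronize their data through a gossip network.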
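The majority requirement of Raft comes down to simple arithmetic. The sketch below is only that arithmetic, with hypothetical function names, and is not part of any Docker API.

```python
def quorum(managers: int) -> int:
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers can be lost while a majority remains."""
    return (managers - 1) // 2


for n in (1, 2, 3, 4, 5, 7):
    print(f"{n} managers: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from three to four managers raises the quorum without raising the number of tolerated failures, which is why odd manager counts are preferred.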

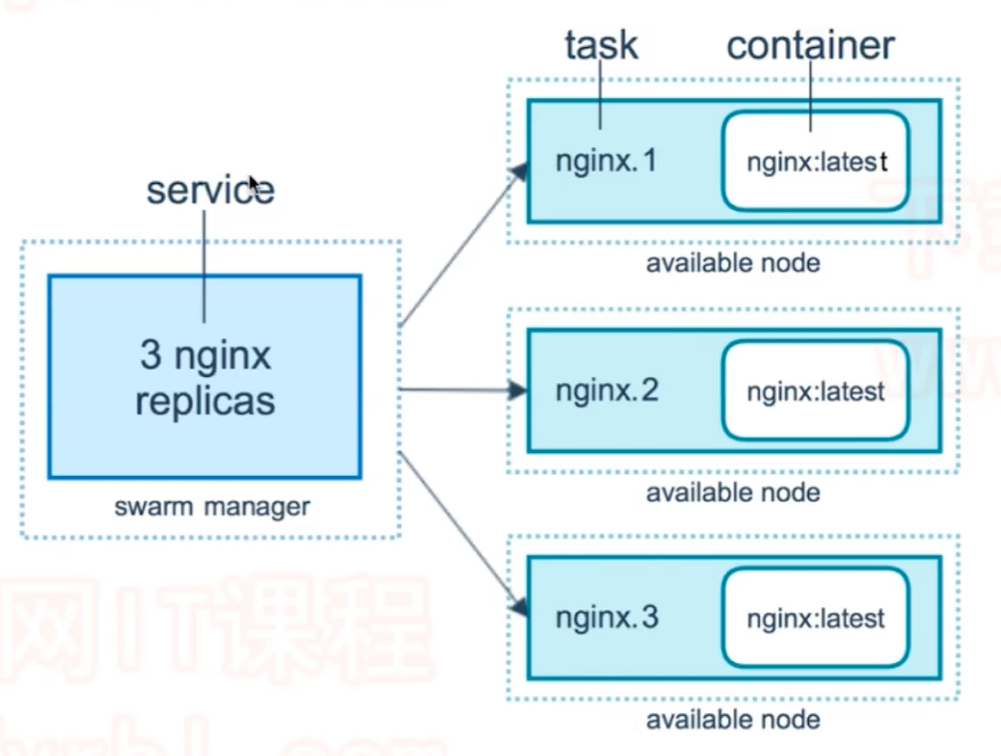

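¶Services and replicas

A service can be run as multiple replicas for horizontal scaling. The replicas are scheduled onto different nodes according to the resources actually available.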

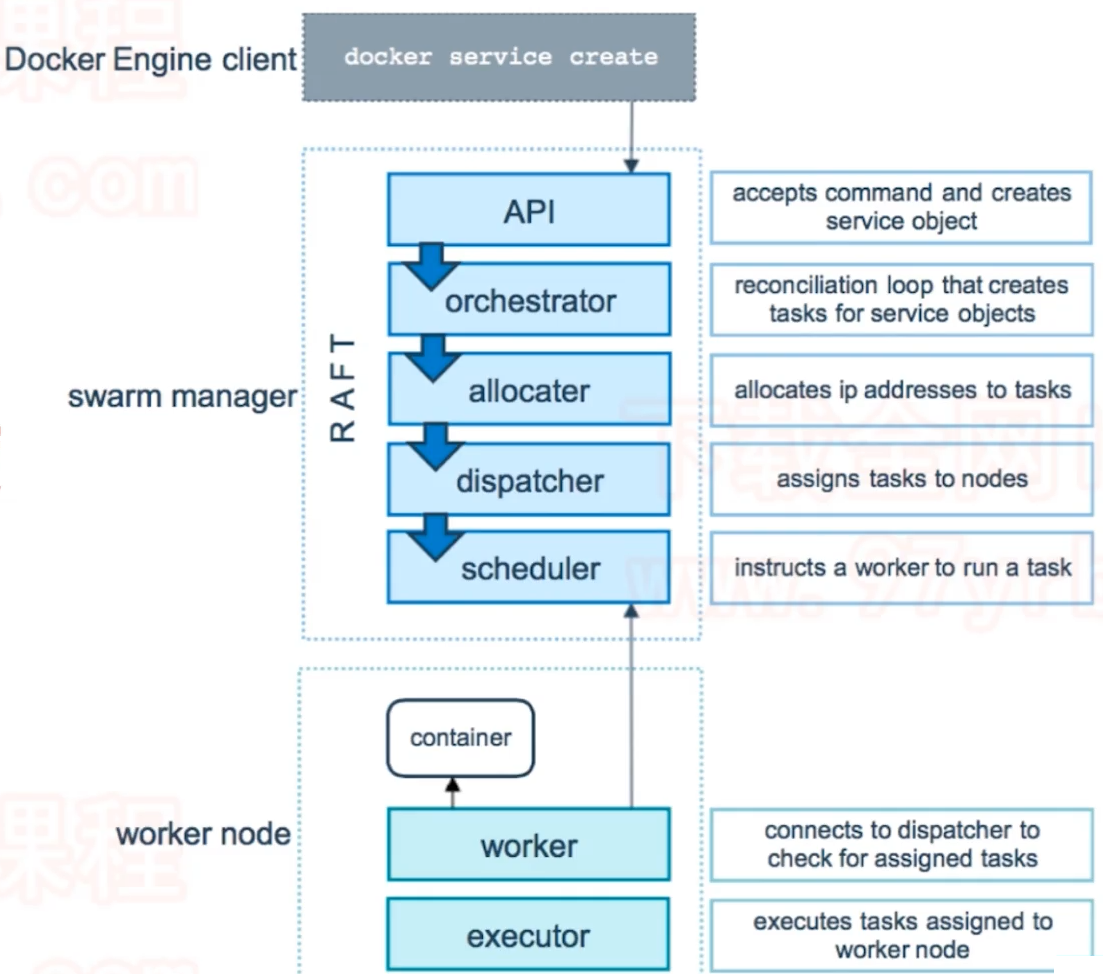

¶Execution flow

¶Usage

¶Commands

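docker

- swarm: operate on a swarm cluster

  - init: initialize a swarm cluster; the current node becomes a manager node

    - --advertise-addr <addr_string>: the address advertised to the other swarm nodes, which they use to reach this node

  - join: join the current node to a swarm cluster as a worker node

    - --token <token_string>: the token of the cluster to join

- node: inspect the nodes of the swarm cluster

  - ls: list all nodes in the swarm cluster; can only be run on a manager node

- service: service operations

  - create: create a service; it does not necessarily run on the local node, depending on scheduling

  - ls: show an overview of all services

  - ps <service-name-or-id>: show details of the given service, including which nodes it runs on

  - scale <service-name>=<replicas-num> [<service-name>=<replicas-num>...]: scale one or more services horizontally by setting their replica counts. The default replica count is 1, i.e. no scaling. Replicas of a scaled service that are lost are recovered automatically.

  - rm <service-name> [<service-name>...]: remove the given services.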

¶Create a three-node cluster

Initialize a swarm cluster on host swarm-manager:

[root@swarm-manager ~]# docker swarm init --advertise-addr=192.168.205.10

Swarm initialized: current node (16le9my05jir8fjagatlcxx2c) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-5ftx7ja9b2c20rhkheu2dnms06j4t646rkiwjbkcns1hhwa4rk-6l0kapgwbsea66pih6cvxmu3k 192.168.205.10:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

Join swarm-worker1 and swarm-worker2 to the cluster as worker nodes:

[root@swarm-worker1 ~]# docker swarm join --token SWMTKN-1-5ftx7ja9b2c20rhkheu2dnms06j4t646rkiwjbkcns1hhwa4rk-6l0kapgwbsea66pih6cvxmu3k 192.168.205.10:2377

This node joined a swarm as a worker.

[root@swarm-worker2 ~]# docker swarm join --token SWMTKN-1-5ftx7ja9b2c20rhkheu2dnms06j4t646rkiwjbkcns1hhwa4rk-6l0kapgwbsea66pih6cvxmu3k 192.168.205.10:2377

This node joined a swarm as a worker.

Back on host swarm-manager, list the cluster nodes:

[root@swarm-manager ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

16le9my05jir8fjagatlcxx2c * swarm-manager Ready Active Leader 19.03.13

p9026oe5ws78l7vpoj2b342q0 swarm-worker1 Ready Active 19.03.13

3zilh3e4keprghwftsv3g7doi swarm-worker2 Ready Active 19.03.13

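¶Create a service on the manager

Create a service with docker service create. docker service ps shows that the service runs on the current manager node, and docker ps shows that the container's name is not the service name we specified but has extra parts appended to keep it unique. docker service ls shows that the service's mode is replicated with a single replica, meaning it has not been scaled yet.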

[root@swarm-manager ~]# docker service create --name demo busybox sh -c "while true;do sleep 60;done"

vtfjx1iw3mdfwdwakqs5jyxep

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

vtfjx1iw3mdf demo replicated 1/1 busybox:latest

[root@swarm-manager ~]# docker service ps demo

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

9oaa1o7f0fb5 demo.1 busybox:latest swarm-manager Running Running about a minute ago

[root@swarm-manager ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

050d6438f24d busybox:latest "sh -c 'while true;d…" 2 minutes ago Up 2 minutes demo.1.9oaa1o7f0fb5l1mjmovv6fxyd

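¶Dynamic horizontal scaling

With one replica of the service already running, use docker service scale to set the replica count to 5, i.e. add four more replicas. In the REPLICAS column of docker service ls, the numerator is the number of ready replicas and the denominator is the replica count requested with scale: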

[root@swarm-manager ~]# docker service scale demo=5

demo scaled to 5

overall progress: 5 out of 5 tasks

1/5: running [==================================================>]

2/5: running [==================================================>]

3/5: running [==================================================>]

4/5: running [==================================================>]

5/5: running [==================================================>]

verify: Service converged

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

vtfjx1iw3mdf demo replicated 5/5 busybox:latest

[root@swarm-manager ~]# docker service ps demo

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

9oaa1o7f0fb5 demo.1 busybox:latest swarm-manager Running Running 10 minutes ago

kfevbmud5rlo demo.2 busybox:latest swarm-worker2 Running Running 2 minutes ago

0c9ol7s99ajw demo.3 busybox:latest swarm-worker2 Running Running 2 minutes ago

id6r217b847s demo.4 busybox:latest swarm-manager Running Running 2 minutes ago

z3nk7cethkao demo.5 busybox:latest swarm-worker1 Running Running 2 minutes ago

¶Automatic recovery

First remove one of the service's containers on any node:

[root@swarm-worker2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9c21a650f557 busybox:latest "sh -c 'while true;d…" 5 minutes ago Up 5 minutes demo.3.0c9ol7s99ajwc80uz224lwew8

4938879da7fe busybox:latest "sh -c 'while true;d…" 5 minutes ago Up 5 minutes demo.2.kfevbmud5rlotwmn7xmdk1q2a

[root@swarm-worker2 ~]# docker rm -f 4938879da7fe

4938879da7fe

The ready count in REPLICAS drops by 1:

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

vtfjx1iw3mdf demo replicated 4/5 busybox:latest

Checking again shows that it has recovered to 5:

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

vtfjx1iw3mdf demo replicated 5/5 busybox:latest
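The recovery from 4/5 back to 5/5 is an instance of reconciling desired state against actual state. A minimal sketch of one reconciliation pass, assuming replicas are identified by slot numbers such as the 2 in demo.2 (the `reconcile` function is hypothetical and far simpler than swarm's real orchestrator):

```python
def reconcile(desired_replicas: int, running_slots) -> tuple:
    """Compare desired and actual state; return (slots to start, slots to stop)."""
    wanted = set(range(1, desired_replicas + 1))
    running = set(running_slots)
    to_start = sorted(wanted - running)   # missing replicas get recreated
    to_stop = sorted(running - wanted)    # surplus replicas get removed
    return to_start, to_stop


# demo was scaled to 5 and the container behind demo.2 was force-removed.
print(reconcile(5, [1, 3, 4, 5]))
# Scaling down works the same way, in the other direction.
print(reconcile(2, [1, 2, 3]))
```

Running such a pass repeatedly is what makes the REPLICAS column converge back to the requested count without any manual action.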

¶Multi-host networking in swarm mode

First create an overlay network with docker network create:

[root@swarm-manager ~]# docker network create -d overlay demo

88eid380la4nk4cbm4j6fg7ji

[root@swarm-manager ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

73fb8708e978 bridge bridge local

88eid380la4n demo overlay swarm

7c337a10f364 docker_gwbridge bridge local

7e166669d3c0 host host local

id0v0fnkkaz6 ingress overlay swarm

1f203646282a none null local

At this point the network is not yet visible on the other nodes:

[root@swarm-worker2 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

fa22d819b3a6 bridge bridge local

1852f7d5af51 docker_gwbridge bridge local

bebcfcafd9b1 host host local

id0v0fnkkaz6 ingress overlay swarm

c47aacb76a84 none null local

Start a mysql service and a wordpress service on the manager. The wordpress service is scheduled onto host worker2:

[root@swarm-manager ~]# docker service create --name mysql --env MYSQL_ROOT_PASSWORD=root --env MYSQL_DATABASE=wordpress --network demo --mount type=volume,source=mysql,destination=/var/lib/mysql mysql

9iq09p6da4vbmeu1mvdmzrl2n

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

9iq09p6da4vb mysql replicated 1/1 mysql:latest

[root@swarm-manager ~]# docker service create --name wordpress -p 80:80 --env WORDPRESS_DB_PASSWORD=root --env WORDPRESS_DB_HOST=mysql --network demo wordpress

lm7s3n6oaw47nt46ge13mt5g3

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

9iq09p6da4vb mysql replicated 1/1 mysql:latest

lm7s3n6oaw47 wordpress replicated 1/1 wordpress:latest *:80->80/tcp

[root@swarm-manager ~]# docker service ps wordpress

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

b5n9cnap232j wordpress.1 wordpress:latest swarm-worker2 Running Running about a minute ago

Listing the networks on node worker2 now shows the demo overlay network:

[root@swarm-worker2 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

fa22d819b3a6 bridge bridge local

88eid380la4n demo overlay swarm

1852f7d5af51 docker_gwbridge bridge local

bebcfcafd9b1 host host local

id0v0fnkkaz6 ingress overlay swarm

c47aacb76a84 none null local

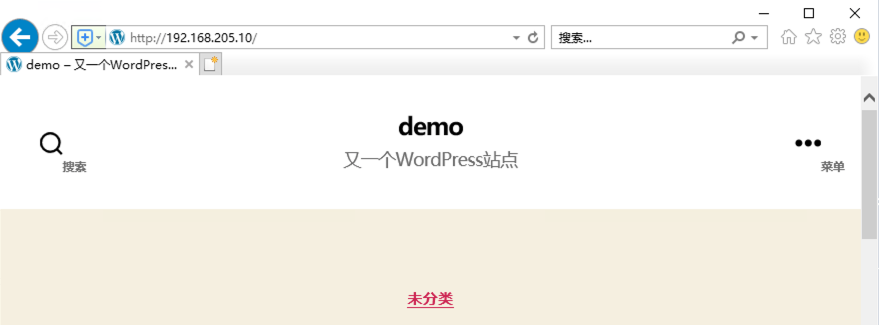

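So in swarm mode, multi-host networking no longer needs an external distributed store such as etcd. In addition, although the wordpress container runs on node worker2, the service can be reached through the IP address of any of the three nodes.

¶Network notes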

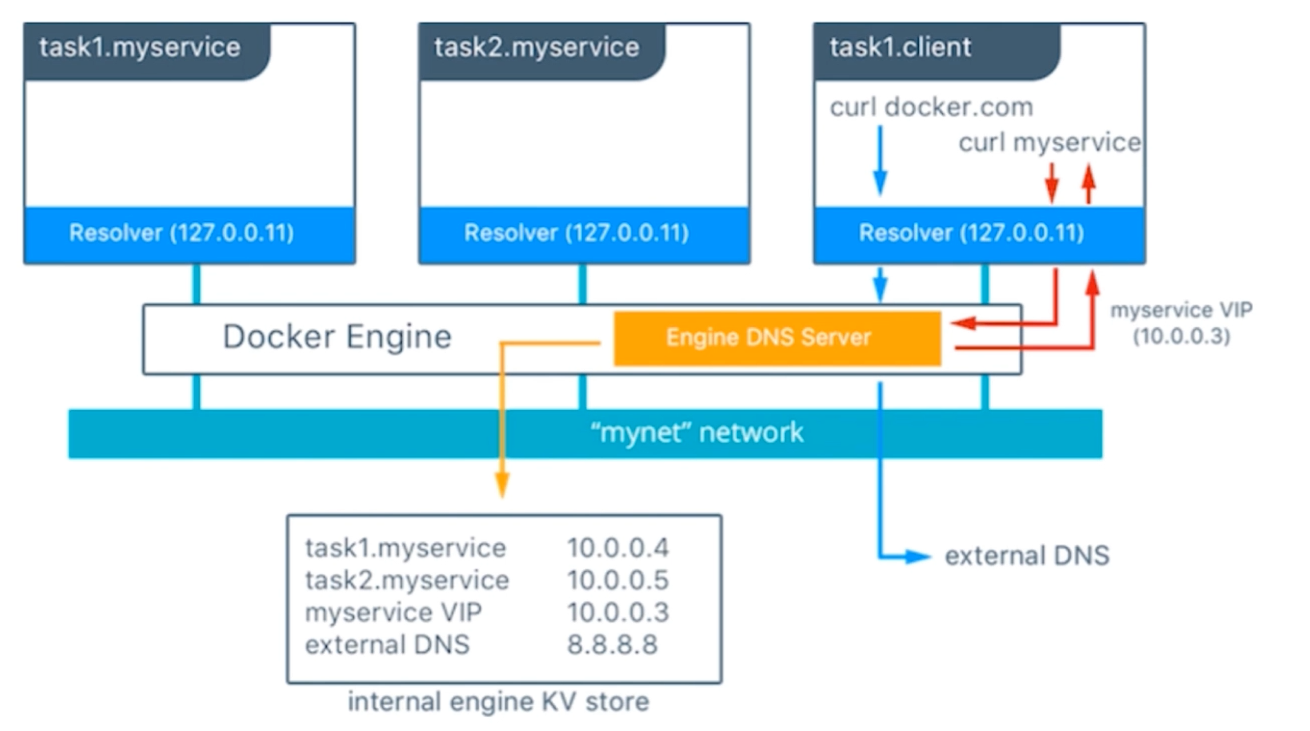

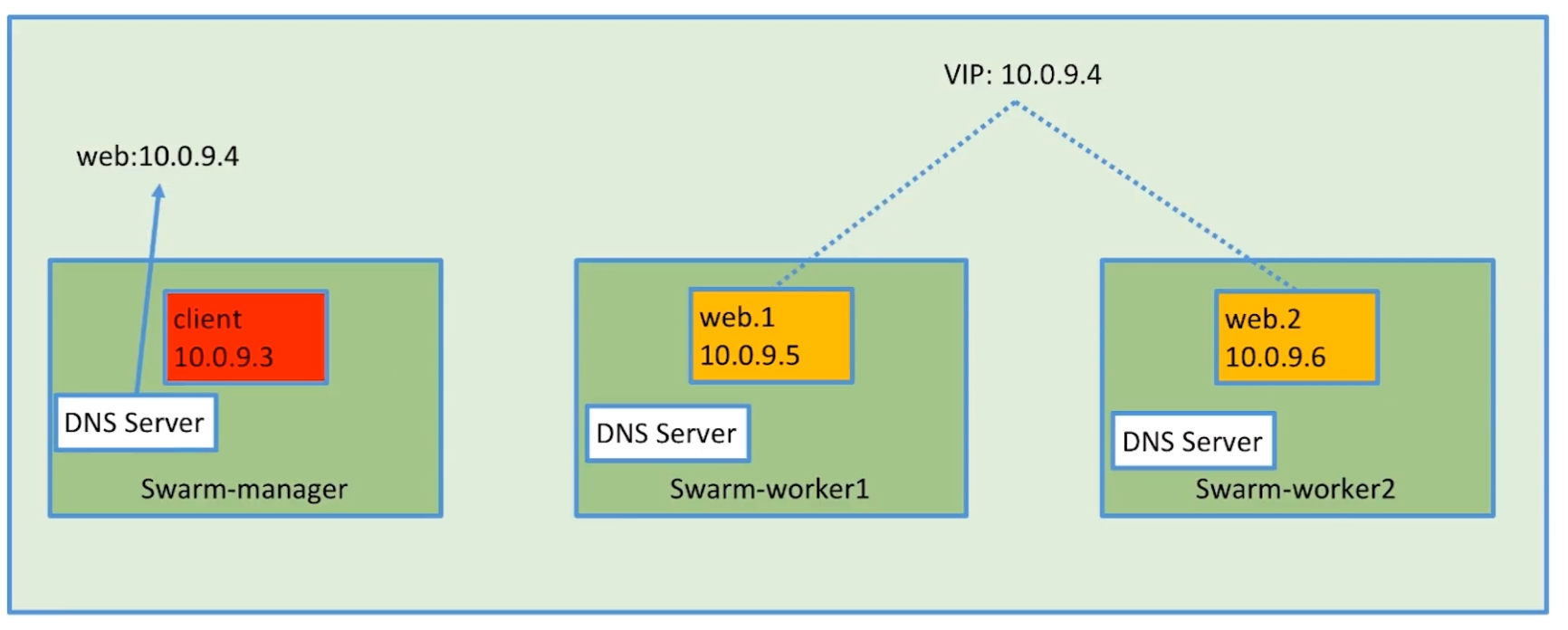

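Services created by either docker-compose or docker swarm can reach each other by service name. This works because Docker Engine has a built-in DNS service: a service name resolves to the service's virtual IP address on its overlay network, and the virtual IP in turn maps to the containers' real IP addresses, because the real addresses can change:

- When a service goes down and restarts, it may be scheduled onto a different host, which changes its IP address.

- A container may be rescheduled directly onto a different host, which changes its IP address.

- When a service is scaled to multiple replicas for horizontal scaling, it actually has several real IP addresses.

The service's IP, by contrast, does not change. The mapping and load balancing between the service's virtual IP and the containers' real IP addresses is implemented with LVS (IPVS).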
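The two-step lookup (service name to virtual IP, virtual IP to a container) can be modelled as follows. This is a toy sketch and not how IPVS is programmed: the `ServiceVIP` class is hypothetical, the backend addresses 10.0.1.14 and 10.0.1.15 are made up for illustration, and round-robin scheduling is an assumption.

```python
class ServiceVIP:
    """Toy virtual IP that spreads requests over the service's containers."""

    def __init__(self, vip: str, backends):
        self.vip = vip                    # stable address seen by clients
        self.backends = list(backends)    # real container addresses
        self._next = 0

    def scale(self, backends) -> None:
        """Replace the backend set, e.g. after scaling or rescheduling."""
        self.backends = list(backends)
        self._next = 0

    def pick_backend(self) -> str:
        """Round-robin choice of the container that serves one request."""
        backend = self.backends[self._next % len(self.backends)]
        self._next += 1
        return backend


# Embedded DNS: service name -> virtual IP object.
dns = {"whoami": ServiceVIP("10.0.1.13", ["10.0.1.14"])}

svc = dns["whoami"]
vip_before = svc.vip
svc.scale(["10.0.1.14", "10.0.1.15"])     # service scaled to two replicas
picks = [svc.pick_backend() for _ in range(4)]
print(vip_before, svc.vip, picks)
```

Clients keep resolving the service name to the same virtual IP, while the set of containers behind it is free to change.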

¶Example

First start a whoami service. It listens on port 8000 and returns its host name when accessed. Then start a busybox service named client; later we will ping the whoami service from inside busybox.

[root@swarm-manager ~]# docker service create --name whoami -p 8000:8000 --network demo -d jwilder/whoami

j0d5g1wbfckk2jdtqb3fnt00g

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

j0d5g1wbfckk whoami replicated 1/1 jwilder/whoami:latest *:8000->8000/tcp

[root@swarm-manager ~]# docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ireyqp5j9gj3 whoami.1 jwilder/whoami:latest swarm-worker1 Running Running 3 minutes ago

[root@swarm-manager ~]# curl 127.0.0.1:8000

I'm 7d6a8c51c9d5

[root@swarm-manager ~]# docker service create --name client -d --network demo busybox sh -c "while true;do sleep 60;done"

ss6z96qypr8ezsubmy51jgeej

[root@swarm-manager ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ss6z96qypr8e client replicated 1/1 busybox:latest

j0d5g1wbfckk whoami replicated 1/1 jwilder/whoami:latest *:8000->8000/tcp

docker service ps shows that the busybox service is running on this machine. Entering the busybox container and pinging the whoami service returns the IP address 10.0.1.13:

[root@swarm-manager ~]# docker service ps client

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

we6rr1uirf0t client.1 busybox:latest swarm-manager Running Running 24 seconds ago

[root@swarm-manager ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

01f1dc5f93d0 busybox:latest "sh -c 'while true;d…" About a minute ago Up About a minute client.1.we6rr1uirf0tbin4dr4ftt7fk

[root@swarm-manager ~]# docker exec -it 01f1dc5f93d0 sh

/ # ping whoami

PING whoami (10.0.1.13): 56 data bytes

64 bytes from 10.0.1.13: seq=0 ttl=64 time=0.339 ms

64 bytes from 10.0.1.13: seq=1 ttl=64 time=0.189 ms

64 bytes from 10.0.1.13: seq=2 ttl=64 time=0.187 ms

^C

--- whoami ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.187/0.238/0.339 ms

/ #

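After scaling the whoami service out to two containers, the new container is scheduled on the worker2 node. Pinging whoami from busybox again still returns 10.0.1.13, so the service's IP did not change when it was scaled: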

[root@swarm-manager ~]# docker service scale whoami=2

whoami scaled to 2

overall progress: 2 out of 2 tasks

1/2: running [==================================================>]

2/2: running [==================================================>]

verify: Service converged

[root@swarm-manager ~]# docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ireyqp5j9gj3 whoami.1 jwilder/whoami:latest swarm-worker1 Running Running 2 hours ago

z0prnv2sbzwz whoami.2 jwilder/whoami:latest swarm-worker2 Running Running 24 seconds ago

[root@swarm-manager ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

01f1dc5f93d0 busybox:latest "sh -c 'while true;d…" 2 hours ago Up 2 hours client.1.we6rr1uirf0tbin4dr4ftt7fk

[root@swarm-manager ~]# docker exec -it 01f1dc5f93d0 sh

/ # ping whoami

PING whoami (10.0.1.13): 56 data bytes

64 bytes from 10.0.1.13: seq=0 ttl=64 time=0.121 ms

64 bytes from 10.0.1.13: seq=1 ttl=64 time=0.124 ms

^C

--- whoami ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.121/0.122/0.124 ms

/ # exit

[root@swarm-manager ~]#

Inspecting the first whoami container on the worker1 node shows that it does not hold the 10.0.1.13 address returned by the ping above:

[root@swarm-worker1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7d6a8c51c9d5 jwilder/whoami:latest "/app/http" 2 hours ago Up 2 hours 8000/tcp whoami.1.ireyqp5j9gj3cu6sx61fbmie1

[root@swarm-worker1 ~]# docker exec 7d6a8c51c9d5 ip a | grep 10.0.1.13

[root@swarm-worker1 ~]# docker exec 7d6a8c51c9d5 ip a | grep inet

inet 127.0.0.1/8 scope host lo

inet 10.0.0.11/24 brd 10.0.0.255 scope global eth1

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth2

inet 10.0.1.14/24 brd 10.0.1.255 scope global eth0

The new container on worker2 does not have it either:

[root@swarm-worker2 ~]# docker exec 1ed0b9d6310d ip a | grep 10.0.1.13

[root@swarm-worker2 ~]# docker exec 1ed0b9d6310d ip a | grep inet

inet 127.0.0.1/8 scope host lo

inet 10.0.0.12/24 brd 10.0.0.255 scope global eth1

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth2

inet 10.0.1.19/24 brd 10.0.1.255 scope global eth0

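Enter busybox again and use nslookup to look up whoami. The DNS server address returned is 127.0.0.11:53, which is the DNS service built into Docker Engine. Looking up tasks.whoami, on the other hand, returns two IP addresses. Each is one of the actual IPs we found above on the containers on worker1 and worker2 (their eth0 addresses, 10.0.1.14 and 10.0.1.19):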

[root@swarm-manager ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

01f1dc5f93d0 busybox:latest "sh -c 'while true;d…" 2 hours ago Up 2 hours client.1.we6rr1uirf0tbin4dr4ftt7fk

[root@swarm-manager ~]# docker exec -it 01f1dc5f93d0 sh

/ # nslookup -type=a whoami

Server: 127.0.0.11

Address: 127.0.0.11:53

Non-authoritative answer:

Name: whoami

Address: 10.0.1.13

/ # nslookup -type=a tasks.whoami

Server: 127.0.0.11

Address: 127.0.0.11:53

Non-authoritative answer:

Name: tasks.whoami

Address: 10.0.1.19

Name: tasks.whoami

Address: 10.0.1.14

/ # exit
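The same two lookups can be made programmatically. The following is a minimal sketch using only the Python standard library; resolve_ipv4 and describe are hypothetical helper names, and it assumes the code runs in a container attached to the demo network that has Python available (the busybox client used here does not).

```python
import socket
from typing import List


def resolve_ipv4(name: str) -> List[str]:
    """Return the sorted, de-duplicated IPv4 addresses the system resolver gives for name."""
    infos = socket.getaddrinfo(name, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def describe(service: str) -> str:
    """Format the VIP lookup and the per-task lookup side by side."""
    vip = resolve_ipv4(service)
    tasks = resolve_ipv4("tasks." + service)
    return f"{service} -> {vip}; tasks.{service} -> {tasks}"
```

Calling describe("whoami") from such a container should report a single VIP for the service name and one address per running task for the tasks. name, matching the nslookup output above.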

Now scale out by one more container, bringing the total to 3:

[root@swarm-manager ~]# docker service scale whoami=3

whoami scaled to 3

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

[root@swarm-manager ~]# docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ireyqp5j9gj3 whoami.1 jwilder/whoami:latest swarm-worker1 Running Running 2 hours ago

z0prnv2sbzwz whoami.2 jwilder/whoami:latest swarm-worker2 Running Running 27 minutes ago

o6qod1li5v2t whoami.3 jwilder/whoami:latest swarm-manager Running Running 23 seconds ago

[root@swarm-manager ~]# docker exec ee75ad6b35b8 ip a | grep inet

inet 127.0.0.1/8 scope host lo

inet 10.0.0.13/24 brd 10.0.0.255 scope global eth1

inet 172.18.0.4/16 brd 172.18.255.255 scope global eth2

inet 10.0.1.21/24 brd 10.0.1.255 scope global eth0

Then enter busybox again and look up tasks.whoami with nslookup; the result now includes the new container's IP address, 10.0.1.21:

[root@swarm-manager ~]# docker exec -it 01f1dc5f93d0 sh

/ # nslookup -type=a tasks.whoami

Server: 127.0.0.11

Address: 127.0.0.11:53

Non-authoritative answer:

Name: tasks.whoami

Address: 10.0.1.19

Name: tasks.whoami

Address: 10.0.1.21

Name: tasks.whoami

Address: 10.0.1.14

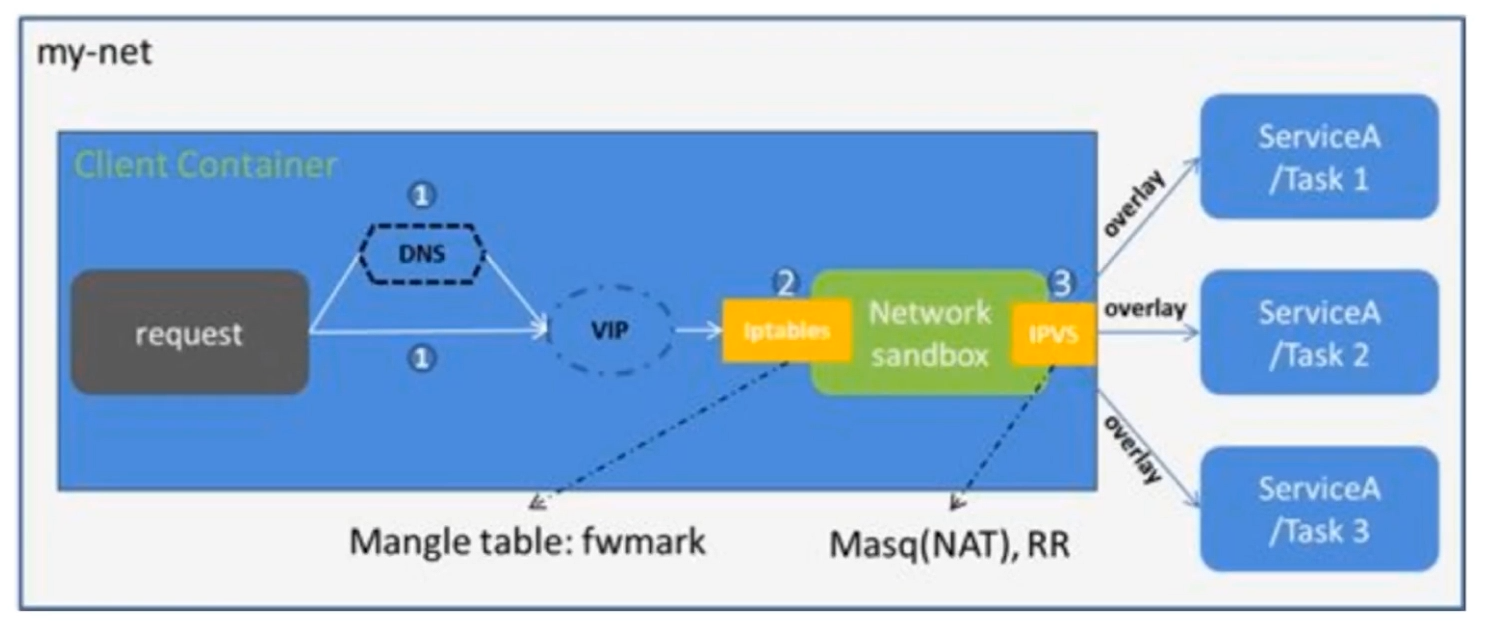

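Then call the whoami service three times. Each call returns a different hostname, so the three requests were handled by three different containers. This is the load balancing implemented by LVS: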

/ # wget whoami:8000 -O - -q

I'm ee75ad6b35b8

/ # wget whoami:8000 -O - -q

I'm 1ed0b9d6310d

/ # wget whoami:8000 -O - -q

I'm 7d6a8c51c9d5

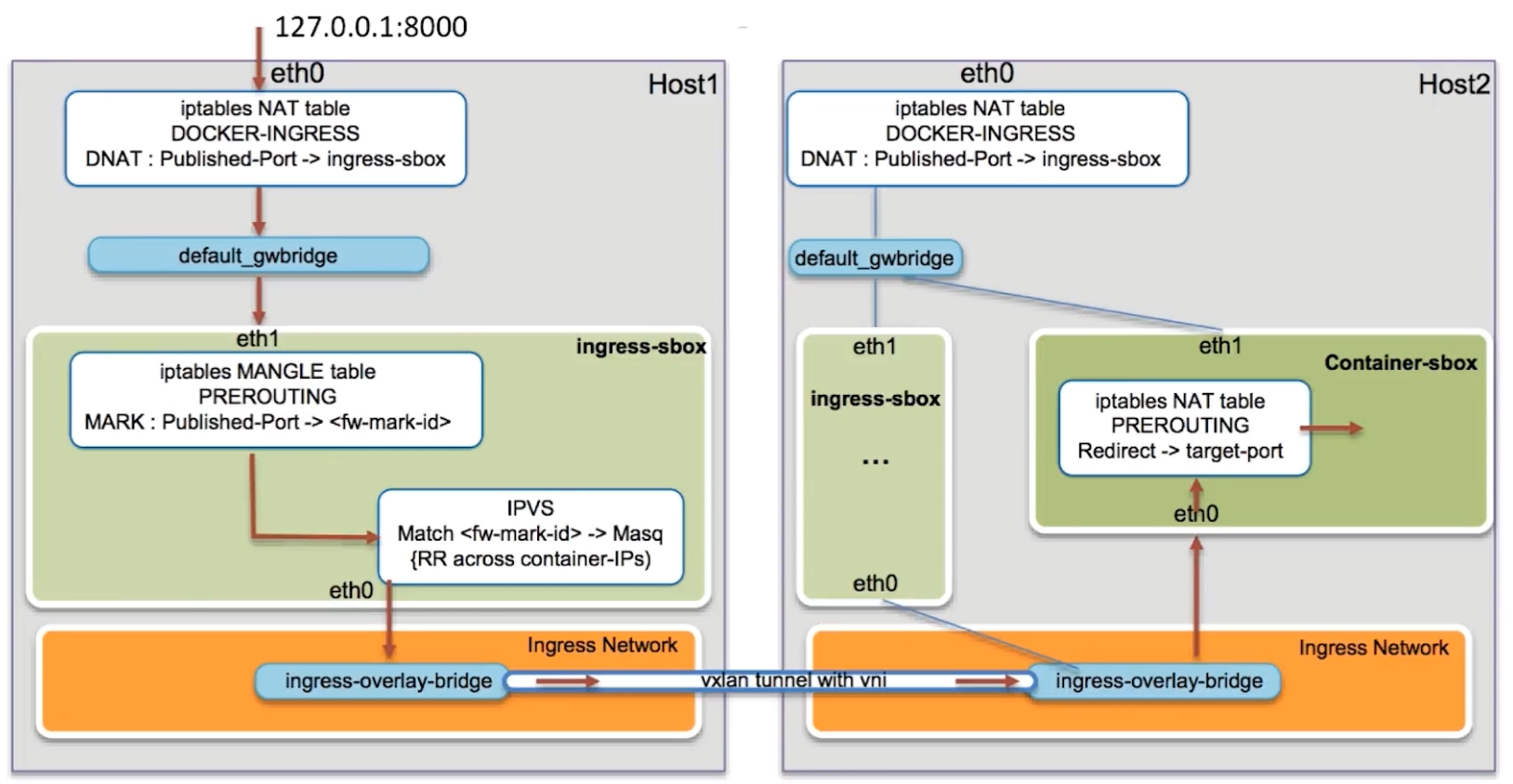
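To check the distribution over more than three requests, the replies can be tallied. This is a rough sketch: http_fetch and tally_backends are hypothetical helpers, and the demonstration at the bottom uses a fake fetcher that replays the three replies from the transcript so that it runs without a swarm.

```python
import urllib.request
from collections import Counter
from typing import Callable


def http_fetch(url: str) -> str:
    """Fetch url and return the response body as stripped text."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8", errors="replace").strip()


def tally_backends(fetch: Callable[[], str], requests: int) -> Counter:
    """Call fetch() the given number of times and count each distinct reply."""
    return Counter(fetch() for _ in range(requests))


# Offline demonstration: a fake fetcher cycling through the replies seen above.
# On the overlay network one would pass: lambda: http_fetch("http://whoami:8000")
replies = iter(["I'm ee75ad6b35b8", "I'm 1ed0b9d6310d", "I'm 7d6a8c51c9d5"] * 2)
counts = tally_backends(lambda: next(replies), 6)
print(len(counts), "distinct backends:", dict(counts))
```

A roughly even count per hostname suggests the requests are being spread across all replicas.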

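¶Two forms of Routing Mesh

The example above shows that the overlay network of docker-compose and docker swarm differs from the overlay network (VXLAN tunnel) we set up earlier with etcd: the former is implemented inside Docker Engine itself.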

-

Internal:Container 和 Container 之间的访问通过 overlay 网络的 VIP(虚拟IP)进行,如果一个服务有做横向扩展,会实现负载均衡:

-

DNS + VIP + iptables + LVS

client 容器发起一个请求先走内部的 DNS 解析得到 VIP 后走 iptables 和 IPVS (LVS)根据要访问的服务和端口负载均衡到其中一个容器上:

-

-

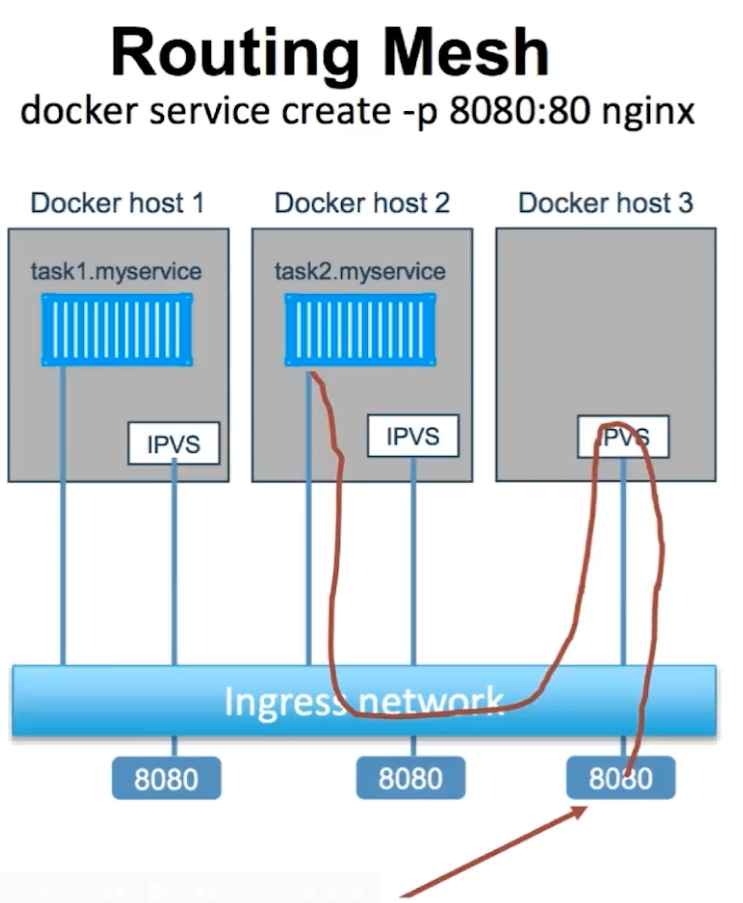

Ingress:如果服务有绑定接口,则此服务可以通过任意 swarm 节点的相应接口访问(就是上面提到的 wordpress 只有一个容器但是在三个节点上都可以访问的情况)

- 外部访问的负载均衡

- 服务端口被暴露到各个 swarm 节点

- 内部通过 IPVS 进行负载均衡
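The ingress behaviour can be illustrated with a toy model in which the node that receives a request is independent of the node that serves it. ToyIngress is a hypothetical class, and plain round-robin is an assumption made for the sketch; it does not reproduce IPVS itself.

```python
from itertools import cycle
from typing import Dict, List


class ToyIngress:
    """Toy routing mesh: a published port is reachable on every swarm node."""

    def __init__(self, nodes: List[str], tasks: Dict[str, str]) -> None:
        # tasks maps container ID -> the node that container runs on
        self.nodes = set(nodes)
        self.tasks = tasks
        # Round-robin over all tasks, wherever they run (an assumption of this sketch).
        self._next = cycle(sorted(tasks))

    def request(self, entry_node: str) -> str:
        """Return the container ID that serves a request arriving at entry_node."""
        if entry_node not in self.nodes:
            raise ValueError(f"{entry_node} is not a swarm node")
        # The entry node does not need to host a task itself.
        return next(self._next)


mesh = ToyIngress(
    nodes=["swarm-manager", "swarm-worker1", "swarm-worker2"],
    tasks={"7d6a8c51c9d5": "swarm-worker1", "1ed0b9d6310d": "swarm-worker2"},
)
served_by = [mesh.request("swarm-manager") for _ in range(4)]
print(served_by)
```

Here every request enters through swarm-manager, which runs no whoami task, yet the replies alternate between the containers on the two workers.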

¶Ingress Network example

List the containers of the whoami service currently in the swarm:

[root@swarm-manager ~]# docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ireyqp5j9gj3 whoami.1 jwilder/whoami:latest swarm-worker1 Running Running 3 hours ago

z0prnv2sbzwz whoami.2 jwilder/whoami:latest swarm-worker2 Running Running about an hour ago

[root@swarm-manager ~]# curl 127.0.0.1:8000

I'm 1ed0b9d6310d

[root@swarm-manager ~]# curl 127.0.0.1:8000

I'm 7d6a8c51c9d5

The iptables nat table ends with a DOCKER-INGRESS chain, which contains a DNAT rule that forwards TCP packets destined for port 8000 to 172.18.0.2:8000:

[root@swarm-manager ~]# iptables -nL -t nat

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DOCKER-INGRESS all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

DOCKER all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

DOCKER-INGRESS all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

DOCKER all -- 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match src-type LOCAL

MASQUERADE all -- 172.18.0.0/16 0.0.0.0/0

MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0

Chain DOCKER (2 references)

target prot opt source destination

RETURN all -- 0.0.0.0/0 0.0.0.0/0

RETURN all -- 0.0.0.0/0 0.0.0.0/0

Chain DOCKER-INGRESS (2 references)

target prot opt source destination

DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 to:172.18.0.2:8000

RETURN all -- 0.0.0.0/0 0.0.0.0/0
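When a node publishes many ports, picking the DNAT rules out of the listing by eye gets tedious. The sketch below extracts them with a regular expression. parse_dnat and DnatRule are hypothetical names, and the pattern assumes the line layout of the iptables -nL output shown above.

```python
import re
from typing import List, NamedTuple


class DnatRule(NamedTuple):
    proto: str
    dport: int
    to_ip: str
    to_port: int


# Matches lines such as: DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 to:172.18.0.2:8000
DNAT_RE = re.compile(
    r"^DNAT\s+(?P<proto>\S+)\s.*\bdpt:(?P<dport>\d+)\s+to:(?P<ip>[\d.]+):(?P<port>\d+)"
)


def parse_dnat(listing: str) -> List[DnatRule]:
    """Return every DNAT rule found in an iptables -nL -t nat listing."""
    rules = []
    for line in listing.splitlines():
        m = DNAT_RE.match(line.strip())
        if m:
            rules.append(DnatRule(m["proto"], int(m["dport"]), m["ip"], int(m["port"])))
    return rules


sample = """\
Chain DOCKER-INGRESS (2 references)
target prot opt source destination
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:8000 to:172.18.0.2:8000
RETURN all -- 0.0.0.0/0 0.0.0.0/0
"""
rules = parse_dnat(sample)
print(rules)
```

For the listing above this yields a single rule mapping TCP port 8000 to 172.18.0.2:8000.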

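Listing all interfaces on the host shows a bridge named docker_gwbridge with the IP address 172.18.0.1/16, which is in the same subnet as 172.18.0.2. From this we can infer that the NAT rule above forwards traffic to a device attached to this bridge through one end of a veth pair: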

[root@swarm-manager ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:4d:77:d3 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 70039sec preferred_lft 70039sec

inet6 fe80::5054:ff:fe4d:77d3/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:25:ee:82 brd ff:ff:ff:ff:ff:ff